Sitemap

A list of all the posts and pages found on the site. For you robots out there, an XML version is also available for digesting.

Pages

Posts

Future Blog Post

Published:

This post will show up by default. To disable scheduling of future posts, edit _config.yml and set future: false.
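As a minimal sketch, assuming a standard Jekyll setup in which the site configuration lives in `_config.yml` at the repository root, the setting mentioned above would look roughly like this:

```yaml
# _config.yml (assumed location: repository root of the Jekyll site)
# When false, posts whose date lies in the future are not published.
future: false
```

Jekyll reads this file at startup, so a running `jekyll serve` process typically needs a restart before the change takes effect.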

Blog Post number 4

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 3

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 2

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 1

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Portfolio

Portfolio item number 1

Short description of portfolio item number 1

Portfolio item number 2

Short description of portfolio item number 2

Publications

Ultra-High Resolution 3D Imaging of Whole Cells

Published in 2016

Fluorescence nanoscopy, or super-resolution microscopy, has become an important tool in cell biological research. However, because of its usually inferior resolution in the depth direction (50–80 nm) and rapidly deteriorating resolution in thick samples, its practical biological application has been effectively limited to two dimensions and thin samples. Here, we present the development of whole-cell 4Pi single-molecule switching nanoscopy (W-4PiSMSN), an optical nanoscope that allows imaging of three-dimensional (3D) structures at 10- to 20-nm resolution throughout entire mammalian cells. We demonstrate the wide applicability of W-4PiSMSN across diverse research fields by imaging complex molecular architectures ranging from bacteriophages to nuclear pores, cilia, and synaptonemal complexes in large 3D cellular volumes.

Download here

Leveraging Statistical Multi-Agent Online Planning with Emergent Value Function Approximation

Published in 2018

Making decisions is a great challenge in distributed autonomous environments due to enormous state spaces and uncertainty. Many online planning algorithms rely on statistical sampling to avoid searching the whole state space, while still being able to make acceptable decisions. However, planning often has to be performed under strict computational constraints making online planning in multi-agent systems highly limited, which could lead to poor system performance, especially in stochastic domains. In this paper, we propose Emergent Value function Approximation for Distributed Environments (EVADE), an approach to integrate global experience into multi-agent online planning in stochastic domains to consider global effects during local planning. For this purpose, a value function is approximated online based on the emergent system behaviour by using methods of reinforcement learning. We empirically evaluated EVADE with two statistical multi-agent online planning algorithms in a highly complex and stochastic smart factory environment, where multiple agents need to process various items at a shared set of machines. Our experiments show that EVADE can effectively improve the performance of multi-agent online planning while offering efficiency w.r.t. the breadth and depth of the planning process.

Download here

Action Markets in Deep Multi-Agent Reinforcement Learning

Published in 2018

Recent work on learning in multi-agent systems (MAS) is concerned with the ability of self-interested agents to learn cooperative behavior. In many settings, such as resource allocation tasks, the lack of cooperative behavior can be seen as a consequence of wrong incentives. That is, when agents cannot freely exchange their resources, greediness is not uncooperative but only a consequence of reward maximization. In this work, we show how the introduction of markets helps to reduce the negative effects of individual reward maximization. To study the emergence of trading behavior in MAS, we use Deep Reinforcement Learning (RL) where agents are self-interested, independent learners represented through Deep Q-Networks (DQNs). Specifically, we propose Action Traders, referring to agents that can trade their atomic actions in exchange for environmental reward. For empirical evaluation, we implemented action trading in the Coin Game and find that trading significantly increases social efficiency in terms of overall reward compared to agents without action trading.

Download here

Preparing for the Unexpected: Diversity Improves Planning Resilience in Evolutionary Algorithms

Published in 2018

As automatic optimization techniques find their way into industrial applications, the behavior of many complex systems is determined by some form of planner picking the right actions to optimize a given objective function. In many cases, the mapping of plans to objective reward may change due to unforeseen events or circumstances in the real world. In those cases, the planner usually needs some additional effort to adjust to the changed situation and reach its previous level of performance. Whenever we still need to continue polling the planner even during re-planning, it oftentimes exhibits severely lacking performance. In order to improve the planner’s resilience to unforeseen change, we argue that maintaining a certain level of diversity amongst the considered plans at all times should be added to the planner’s objective. Effectively, we encourage the planner to keep alternative plans to its currently best solution. As an example case, we implement a diversity-aware genetic algorithm using two different metrics for diversity (differing in their generality) and show that the blow in performance due to unexpected change can be severely lessened in the average case. We also analyze the parameter settings necessary for these techniques in order to gain an intuition of how they can be incorporated into larger frameworks or process models for software and systems engineering.

Download here

The Sharer’s Dilemma in Collective Adaptive Systems of Self-Interested Agents

Published in 2018

In collective adaptive systems (CAS), adaptation can be implemented by optimization w.r.t. utility. Agents in a CAS may be self-interested, while their utilities may depend on other agents’ choices. Independent optimization of agent utilities may yield poor individual and global reward due to locally interfering individual preferences. Joint optimization may scale poorly, and is impossible if agents cannot expose their preferences due to privacy or security issues. In this paper, we study utility sharing for mitigating this issue. Sharing utility with others may incentivize individuals to consider choices that are locally suboptimal but increase global reward. We illustrate our approach with a utility sharing variant of distributed cross entropy optimization. Empirical results show that utility sharing increases expected individual and global payoff in comparison to optimization without utility sharing. We also investigate the effect of greedy defectors in a CAS of sharing, self-interested agents. We observe that defection increases the mean expected individual payoff at the expense of sharing individuals’ payoff. We empirically show that the choice between defection and sharing yields a fundamental dilemma for self-interested agents in a CAS.

Download here

Memory Bounded Open-Loop Planning in Large POMDPs Using Thompson Sampling

Published in 2019

State-of-the-art approaches to partially observable planning like POMCP are based on stochastic tree search. While these approaches are computationally efficient, they may still construct search trees of considerable size, which could limit the performance due to restricted memory resources. In this paper, we propose Partially Observable Stacked Thompson Sampling (POSTS), a memory bounded approach to open-loop planning in large POMDPs, which optimizes a fixed size stack of Thompson Sampling bandits. We empirically evaluate POSTS in four large benchmark problems and compare its performance with different tree-based approaches. We show that POSTS achieves competitive performance compared to tree-based open-loop planning and offers a performance-memory tradeoff, making it suitable for partially observable planning with highly restricted computational and memory resources.

Download here

Distributed Policy Iteration for Scalable Approximation of Cooperative Multi-Agent Policies

Published in 2019

We propose Strong Emergent Policy (STEP) approximation, a scalable approach to learn strong decentralized policies for cooperative MAS with a distributed variant of policy iteration. For that, we use function approximation to learn from action recommendations of a decentralized multi-agent planning algorithm. STEP combines decentralized multi-agent planning with centralized learning, only requiring a generative model for distributed black box optimization. We experimentally evaluate STEP in two challenging and stochastic domains with large state and joint action spaces and show that STEP is able to learn stronger policies than standard multi-agent reinforcement learning algorithms, when combining multi-agent open-loop planning with centralized function approximation. The learned policies can be reintegrated into the multi-agent planning process to further improve performance.

Download here

Scenario Co-Evolution for Reinforcement Learning on a Grid World Smart Factory Domain

Published in 2019

Adversarial learning has been established as a successful paradigm in reinforcement learning. We propose a hybrid adversarial learner where a reinforcement learning agent tries to solve a problem while an evolutionary algorithm tries to find problem instances that are hard to solve for the current expertise of the agent, causing the intelligent agent to co-evolve with a set of test instances or scenarios. We apply this setup, called scenario co-evolution, to a simulated smart factory problem that combines task scheduling with navigation of a grid world. We show that the so trained agent outperforms conventional reinforcement learning. We also show that the scenarios evolved this way can provide useful test cases for the evaluation of any (however trained) agent.

Download here

Emergent Escape-based Flocking Behavior Using Multi-Agent Reinforcement Learning

Published in 2019

In nature, flocking or swarm behavior is observed in many species as it has beneficial properties like reducing the probability of being caught by a predator. In this paper, we propose SELFish (Swarm Emergent Learning Fish), an approach with multiple autonomous agents which can freely move in a continuous space with the objective to avoid being caught by a present predator. The predator has the property that it might get distracted by multiple possible prey in its vicinity. We show that this property, in interaction with self-interested agents which are trained with reinforcement learning solely to survive as long as possible, leads to flocking behavior similar to Boids, a common simulation for flocking behavior. Furthermore, we present interesting insights into the swarming behavior and into the process of agents being caught in our modeled environment.

Download here

Subgoal-Based Temporal Abstraction in Monte-Carlo Tree Search

Published in 2019

We propose an approach to general subgoal-based temporal abstraction in MCTS. Our approach approximates a set of available macro-actions locally for each state only requiring a generative model and a subgoal predicate. For that, we modify the expansion step of MCTS to automatically discover and optimize macro-actions that lead to subgoals. We empirically evaluate the effectiveness, computational efficiency and robustness of our approach w.r.t. different parameter settings in two benchmark domains and compare the results to standard MCTS without temporal abstraction.

Download here

Adaptive Thompson Sampling Stacks for Memory Bounded Open-Loop Planning

Published in 2019

We propose Stable Yet Memory Bounded Open-Loop (SYMBOL) planning, a general memory bounded approach to partially observable open-loop planning. SYMBOL maintains an adaptive stack of Thompson Sampling bandits, whose size is bounded by the planning horizon and can be automatically adapted according to the underlying domain without any prior domain knowledge beyond a generative model. We empirically test SYMBOL in four large POMDP benchmark problems to demonstrate its effectiveness and robustness w.r.t. the choice of hyperparameters and evaluate its adaptive memory consumption. We also compare its performance with other open-loop planning algorithms and POMCP.

Download here

Uncertainty-Based Out-of-Distribution Detection in Deep Reinforcement Learning

Published in 2019

We consider the problem of detecting out-of-distribution (OOD) samples in deep reinforcement learning. In a value based reinforcement learning setting, we propose to use uncertainty estimation techniques directly on the agent’s value estimating neural network to detect OOD samples. The focus of our work lies in analyzing the suitability of approximate Bayesian inference methods and related ensembling techniques that generate uncertainty estimates. Although prior work has shown that dropout-based variational inference techniques and bootstrap-based approaches can be used to model epistemic uncertainty, the suitability for detecting OOD samples in deep reinforcement learning remains an open question. Our results show that uncertainty estimation can be used to differentiate in- from out-of-distribution samples. Over the complete training process of the reinforcement learning agents, bootstrap-based approaches tend to produce more reliable epistemic uncertainty estimates, when compared to dropout-based approaches.

Download here

The Scenario Coevolution Paradigm: Adaptive Quality Assurance for Adaptive Systems

Published in 2020

Systems are becoming increasingly adaptive, using techniques like machine learning to enhance their behavior on their own rather than only through human developers programming them. We analyze the impact the advent of these new techniques has on the discipline of rigorous software engineering, especially on the issue of quality assurance. To this end, we provide a general description of the processes related to machine learning and embed them into a formal framework for the analysis of adaptivity, recognizing that to test an adaptive system a new approach to adaptive testing is necessary. We introduce scenario coevolution as a design pattern describing how system and test can work as antagonists in the process of software evolution. While the general pattern applies to large-scale processes (including human developers further augmenting the system), we show all techniques on a smaller-scale example of an agent navigating a simple smart factory. We point out new aspects in software engineering for adaptive systems that may be tackled naturally using scenario coevolution. This work is a substantially extended take on Gabor et al. (International symposium on leveraging applications of formal methods, Springer, pp 137–154, 2018).

Download here

Uncertainty-Based Out-of-Distribution Classification in Deep Reinforcement Learning

Published in 2020

Robustness to out-of-distribution (OOD) data is an important goal in building reliable machine learning systems. Especially in autonomous systems, wrong predictions for OOD inputs can cause safety critical situations. As a first step towards a solution, we consider the problem of detecting such data in a value-based deep reinforcement learning (RL) setting. Modelling this problem as a one-class classification problem, we propose a framework for uncertainty-based OOD classification: UBOOD. It is based on the effect that an agent’s epistemic uncertainty is reduced for situations encountered during training (in-distribution), and thus lower than for unencountered (OOD) situations. Because the framework is agnostic towards the approach used for estimating epistemic uncertainty, combinations with different uncertainty estimation methods, e.g., approximate Bayesian inference methods or ensembling techniques, are possible. We further present a first viable solution for calculating a dynamic classification threshold, based on the uncertainty distribution of the training data. Evaluation shows that the framework produces reliable classification results when combined with ensemble-based estimators, while the combination with concrete dropout-based estimators fails to reliably detect OOD situations. In summary, UBOOD presents a viable approach for OOD classification in deep RL settings by leveraging the epistemic uncertainty of the agent’s value function.

Download here

Learning and Testing Resilience in Cooperative Multi-Agent Systems

Published in 2020

State-of-the-art multi-agent reinforcement learning has achieved remarkable success in recent years. The success has been mainly based on the assumption that all teammates perfectly cooperate to optimize a global objective in order to achieve a common goal. While this may be true in the ideal case, these approaches could fail in practice, since in multi-agent systems (MAS), all agents may be a potential source of failure. In this paper, we focus on resilience in cooperative MAS and propose an Antagonist-Ratio Training Scheme (ARTS) by reformulating the original target MAS as a mixed cooperative-competitive game between a group of protagonists which represent agents of the target MAS and a group of antagonists which represent failures in the MAS. While the protagonists can learn robust policies to ensure resilience against failures, the antagonists can learn malicious behavior to provide an adequate test suite for other MAS. We empirically evaluate ARTS in a cyber physical production domain and show the effectiveness of ARTS w.r.t. resilience and testing capabilities.

Download here

A Distributed Policy Iteration Scheme for Cooperative Multi-Agent Policy Approximation

Published in 2020

We propose Stable Emergent Policy (STEP) approximation, a distributed policy iteration scheme to stably approximate decentralized policies for partially observable and cooperative multi-agent systems. STEP offers a novel training architecture, where function approximation is used to learn from action recommendations of a decentralized planning algorithm. Planning is enabled by exploiting a training simulator, which is assumed to be available during centralized learning, and further enhanced by reintegrating the learned policies. We experimentally evaluate STEP in two challenging and stochastic domains, and compare its performance with state-of-the-art multi-agent reinforcement learning algorithms.

Download here

Foraging Swarms using Multi-Agent Reinforcement Learning

Published in 2020

Flocking or swarm behavior is a widely observed phenomenon in nature. Although the entities might have self-interested goals like evading predators or foraging, they group themselves together because a collaborative observation is superior to the observation of a single individual. In this paper, we evaluate the emergence of swarms in a foraging task using multi-agent reinforcement learning (MARL). Every individual can move freely in a continuous space with the objective to follow a moving target object in a partially observable environment. The individuals are self-interested as there is no explicit incentive to collaborate with each other. However, our evaluation shows that these individuals learn to form swarms out of self-interest and learn to orient themselves to each other in order to find the target object even when it is out of sight for most individuals.

Download here

Towards Ecosystem Management from Greedy Reinforcement Learning in a Predator-Prey Setting

Published in 2020

This paper applies reinforcement learning to train a predator to hunt multiple prey, which are able to reproduce, in a 2D simulation. It is shown that, using methods of curriculum learning, long-term reward discounting and stacked observations, a reinforcement-learning-based predator can achieve an economic strategy: Only hunt when there is still prey left to reproduce in order to maintain the population. Hence, purely selfish goals are sufficient to motivate a reinforcement learning agent for long-term planning and keeping a certain balance with its environment by not depleting its resources. While a comparably simple reinforcement learning algorithm achieves such behavior in the present scenario, providing a suitable amount of past and predictive information turns out to be crucial for the training success.

Download here

A Quantum Annealing Algorithm for Finding Pure Nash Equilibria in Graphical Games

Published in 2020

We introduce Q-Nash, a quantum annealing algorithm for the NP-complete problem of finding pure Nash equilibria in graphical games. The algorithm consists of two phases. The first phase determines all combinations of best response strategies for each player using classical computation. The second phase finds pure Nash equilibria using a quantum annealing device by mapping the computed combinations to a quadratic unconstrained binary optimization formulation based on the Set Cover problem. We empirically evaluate Q-Nash on D-Wave’s Quantum Annealer 2000Q using different graphical game topologies. The results with respect to solution quality and computing time are compared to a Brute Force algorithm and the Iterated Best Response heuristic.

Download here

The Holy Grail of Quantum Artificial Intelligence: Major Challenges in Accelerating the Machine Learning Pipeline

Published in 2020


Download here

Cross Entropy Hyperparameter Optimization for Constrained Problem Hamiltonians Applied to QAOA

Published in 2020

Hybrid quantum-classical algorithms such as the Quantum Approximate Optimization Algorithm (QAOA) are considered one of the most encouraging approaches for taking advantage of near-term quantum computers in practical applications. Such algorithms are usually implemented in a variational form, combining a classical optimization method with a quantum machine to find good solutions to an optimization problem. The solution quality of QAOA depends to a high degree on the parameters chosen by the classical optimizer at each iteration. However, the solution landscape of those parameters is highly multi-dimensional and contains many low-quality local optima. In this study, we apply a Cross-Entropy method to shape this landscape, which allows the classical optimizer to find better parameters more easily and hence results in improved performance. We empirically demonstrate that this approach can reach a significantly better solution quality for the Knapsack Problem.

Download here

Productive Fitness in Diversity-Aware Evolutionary Algorithms

Published in 2021

In evolutionary algorithms, the notion of diversity has been adopted from biology and is used to describe the distribution of a population of solution candidates. While it has been known that maintaining a reasonable amount of diversity often benefits the overall result of the evolutionary optimization process by adjusting the exploration/exploitation trade-off, little has been known about what diversity is optimal. We introduce the notion of productive fitness based on the effect that a specific solution candidate has some generations down the evolutionary path. We derive the notion of final productive fitness, which is the ideal target fitness for any evolutionary process. Although it is inefficient to compute, we show empirically that it allows for an a posteriori analysis of how well a given evolutionary optimization process hit the ideal exploration/exploitation trade-off, providing insight into why diversity-aware evolutionary optimization often performs better.

Download here

Resilient Multi-Agent Reinforcement Learning with Adversarial Value Decomposition

Published in 2021

We focus on resilience in cooperative multi-agent systems, where agents can change their behavior due to updates or failures of hardware and software components. Current state-of-the-art approaches to cooperative multi-agent reinforcement learning (MARL) have either focused on idealized settings without any changes or on very specialized scenarios, where the number of changing agents is fixed, e.g., in extreme cases with only one productive agent. Therefore, we propose Resilient Adversarial value Decomposition with Antagonist-Ratios (RADAR). RADAR offers a value decomposition scheme to train competing teams of varying size for improved resilience against arbitrary agent changes. We evaluate RADAR in two cooperative multi-agent domains and show that RADAR achieves better worst case performance w.r.t. arbitrary agent changes than state-of-the-art MARL.

Download here

SAT-MARL: Specification Aware Training in Multi-Agent Reinforcement Learning

Published in 2021

A characteristic of reinforcement learning is the ability to develop unforeseen strategies when solving problems. While such strategies sometimes yield superior performance, they may also result in undesired or even dangerous behavior. In industrial scenarios, a system’s behavior also needs to be predictable and lie within defined ranges. To enable the agents to learn (how) to align with a given specification, this paper proposes to explicitly transfer functional and non-functional requirements into shaped rewards. Experiments are carried out on the smart factory, a multi-agent environment modeling an industrial lot-size-one production facility, with up to eight agents and different multi-agent reinforcement learning algorithms. Results indicate that compliance with functional and non-functional constraints can be achieved by the proposed approach.

Download here

A Sustainable Ecosystem through Emergent Cooperation in Multi-Agent Reinforcement Learning

Published in 2021


Download here

VAST: Value Function Factorization with Variable Agent Sub-Teams

Published in 2021

Value function factorization (VFF) is a popular approach to cooperative multi-agent reinforcement learning in order to learn local value functions from global rewards. However, state-of-the-art VFF is limited to a handful of agents in most domains. We hypothesize that this is due to the flat factorization scheme, where the VFF operator becomes a performance bottleneck with an increasing number of agents. Therefore, we propose VFF with variable agent sub-teams (VAST). VAST approximates a factorization for sub-teams which can be defined in an arbitrary way and vary over time, e.g., to adapt to different situations. The sub-team values are then linearly decomposed for all sub-team members. Thus, VAST can learn on a more focused and compact input representation of the original VFF operator. We evaluate VAST in three multi-agent domains and show that VAST can significantly outperform state-of-the-art VFF, when the number of agents is sufficiently large.

Download here

Specification Aware Multi-Agent Reinforcement Learning

Published in 2022

Engineering intelligent industrial systems is challenging due to high complexity and uncertainty with respect to domain dynamics and multiple agents. If industrial systems act autonomously, their choices and results must be within specified bounds to satisfy these requirements. Reinforcement learning (RL) is promising to find solutions that outperform known or handcrafted heuristics. However, in industrial scenarios, it is also crucial to prevent RL from inducing potentially undesired or even dangerous behavior. This paper considers specification alignment in industrial scenarios with multi-agent reinforcement learning (MARL). We propose to embed functional and non-functional requirements into the reward function, enabling the agents to learn to align with the specification. We evaluate our approach in a smart factory simulation representing an industrial lot-size-one production facility, where we train up to eight agents using DQN, VDN, and QMIX. Our results show that the proposed approach enables agents to satisfy a given set of requirements.

Download here

Towards Anomaly Detection in Reinforcement Learning

Published in 2022

Identifying datapoints that substantially differ from normality is the task of anomaly detection (AD). While AD has gained widespread attention in rich data domains such as images, videos, audio and text, it has been studied less frequently in the context of reinforcement learning (RL). This is due to the additional layer of complexity that RL introduces through sequential decision making. Developing suitable anomaly detectors for RL is of particular importance in safety-critical scenarios where acting on anomalous data could result in hazardous situations. In this work, we address the question of what AD means in the context of RL. We found that current research trains and evaluates on overly simplistic and unrealistic scenarios which reduce to classic pattern recognition tasks. We link AD in RL to various fields in RL such as lifelong RL and generalization. We discuss their similarities, differences, and how the fields can benefit from each other. Moreover, we identify non-stationarity to be one of the key drivers for future research on AD in RL and make a first step towards a more formal treatment of the problem by framing it in terms of the recently introduced block contextual Markov decision process. Finally, we define a list of practical desiderata for future problems.

Download here

Emergent Cooperation from Mutual Acknowledgment Exchange

Published in , 2022

Peer incentivization (PI) is a recent approach, where all agents learn to reward or to penalize each other in a distributed fashion which often leads to emergent cooperation. Current PI mechanisms implicitly assume a flawless communication channel in order to exchange rewards. These rewards are directly integrated into the learning process without any chance to respond with feedback. Furthermore, most PI approaches rely on global information which limits scalability and applicability to real-world scenarios, where only local information is accessible. In this paper, we propose Mutual Acknowledgment Token Exchange (MATE), a PI approach defined by a two-phase communication protocol to mutually exchange acknowledgment tokens to shape individual rewards. Each agent evaluates the monotonic improvement of its individual situation in order to accept or reject acknowledgment requests from other agents. MATE is completely decentralized and only requires local communication and information. We evaluate MATE in three social dilemma domains. Our results show that MATE is able to achieve and maintain significantly higher levels of cooperation than previous PI approaches. In addition, we evaluate the robustness of MATE in more realistic scenarios, where agents can defect from the protocol and where communication failures can occur.

Download here

Capturing Dependencies Within Machine Learning via a Formal Process Model

Published in , 2022

The development of Machine Learning (ML) models is more than just a special case of software development (SD): ML models acquire properties and fulfill requirements even without direct human interaction in a seemingly uncontrollable manner. Nonetheless, the underlying processes can be described in a formal way. We define a comprehensive SD process model for ML that encompasses most tasks and artifacts described in the literature in a consistent way. In addition to the production of the necessary artifacts, we also focus on generating and validating fitting descriptions in the form of specifications. We stress the importance of further evolving the ML model throughout its life-cycle even after initial training and testing. Thus, we provide various interaction points with standard SD processes in which ML often is an encapsulated task. Further, our SD process model allows formulating ML as a (meta-) optimization problem. If automated rigorously, it can be used to realize self-adaptive autonomous systems. Finally, our SD process model features a description of time that allows reasoning about the progress within ML development processes. This might lead to further applications of formal methods within the field of ML.

Download here

Attention-Based Recurrency for Multi-Agent Reinforcement Learning under State Uncertainty

Published in , 2023

State uncertainty poses a major challenge for decentralized coordination but is largely neglected in state-of-the-art research due to a strong focus on state-based centralized training for decentralized execution (CTDE) and benchmarks that lack sufficient stochasticity like StarCraft Multi-Agent Challenge (SMAC). In this paper, we propose Attention-based Embeddings of Recurrence In multi-Agent Learning (AERIAL) to approximate value functions under agent-wise state uncertainty. AERIAL replaces the true state with a learned representation of multi-agent recurrence, considering more accurate information about decentralized agent decisions than state-based CTDE. We then introduce MessySMAC, a modified version of SMAC with stochastic observations and higher variance in initial states, to provide a more general and configurable benchmark regarding state uncertainty. We evaluate AERIAL in Dec-Tiger as well as in a variety of SMAC and MessySMAC maps, and compare the results with state-based CTDE. Furthermore, we evaluate the robustness of AERIAL and state-based CTDE against various state uncertainty configurations in MessySMAC.

Download here

DIRECT: Learning from Sparse and Shifting Rewards Using Discriminative Reward Co-Training

Published in , 2023

We propose discriminative reward co-training (DIRECT) as an extension to deep reinforcement learning algorithms. Building upon the concept of self-imitation learning (SIL), we introduce an imitation buffer to store beneficial trajectories generated by the policy determined by their return. A discriminator network is trained concurrently to the policy to distinguish between trajectories generated by the current policy and beneficial trajectories generated by previous policies. The discriminator’s verdict is used to construct a reward signal for optimizing the policy. By interpolating prior experience, DIRECT is able to act as a surrogate, steering policy optimization towards more valuable regions of the reward landscape, thus learning an optimal policy. Our results show that DIRECT outperforms state-of-the-art algorithms in sparse- and shifting-reward environments, being able to provide a surrogate reward to the policy and direct the optimization towards valuable areas.

Download here

Adaptive Bi-Nonlinear Neural Networks Based on Complex Numbers with Weights Constrained along the Unit Circle

Published in , 2023

Traditional real-valued neural networks can suppress neural inputs by setting the weights to zero or overshadow other inputs by using extreme weight values. Large network weights are undesirable because they may cause network instability and lead to exploding gradients. To penalize such large weights, adequate regularization is typically required. This work presents a feed-forward and convolutional layer architecture that constrains weights along the unit circle such that neural connections can never be eliminated or suppressed by weights, ensuring that no incoming information is lost by dying neurons. The neural network’s decision boundaries are redefined by expressing model weights as angles of phase rotations and layer inputs as amplitude modulations, with trainable weights always remaining within a fixed range. The approach can be quickly and readily integrated into existing layers while preserving the model architecture of the original network. The classification performance was tested and assessed on basic computer vision data sets using ShuffleNetv2, ResNet18, and GoogLeNet at high learning rates.

Download here

Emergence and Resilience in Multi-Agent Reinforcement Learning

Published in , 2023

Our world represents an enormous multi-agent system (MAS), consisting of a plethora of agents that make decisions under uncertainty to achieve certain goals. The interaction of agents constantly affects our world in various ways, leading to the emergence of interesting phenomena like life forms and civilizations that can last for many years while withstanding various kinds of disturbances. Building artificial MAS that are able to adapt and survive similarly to natural MAS is a major goal in artificial intelligence as a wide range of potential real-world applications like autonomous driving, multi-robot warehouses, and cyber-physical production systems can be straightforwardly modeled as MAS. Multi-agent reinforcement learning (MARL) is a promising approach to build such systems which has achieved remarkable progress in recent years. However, state-of-the-art MARL commonly assumes very idealized conditions to optimize performance in best-case scenarios while neglecting further aspects that are relevant to the real world. In this thesis, we address emergence and resilience in MARL which are important aspects to build artificial MAS that adapt and survive as effectively as natural MAS do. We first focus on emergent cooperation from local interaction of self-interested agents and introduce a peer incentivization approach based on mutual acknowledgments. We then propose to exploit emergent phenomena to further improve coordination in large cooperative MAS via decentralized planning or hierarchical value function factorization. To maintain multi-agent coordination in the presence of partial changes similar to classic distributed systems, we present adversarial methods to improve and evaluate resilience in MARL. Finally, we briefly cover a selection of further topics that are relevant to advance MARL towards real-world applicability.

Download here

Social Neural Network Soups with Surprise Minimization

Published in , 2023

A recent branch of research in artificial life has constructed artificial chemistry systems whose particles are dynamic neural networks. These particles can be applied to each other and show a tendency towards self-replication of their weight values. We define new interactions for said particles that allow them to recognize one another and learn predictors for each other’s behavior. For instance, each particle minimizes its surprise when observing another particle’s behavior. Given a special catalyst particle to exert evolutionary selection pressure on the soup of particles, these ‘social’ interactions are sufficient to produce emergent behavior similar to the stability-pattern previously only achieved via explicit self-replication training.

Download here

Attention-Based Recurrence for Multi-Agent Reinforcement Learning under Stochastic Partial Observability

Published in , 2023

Stochastic partial observability poses a major challenge for decentralized coordination in multi-agent reinforcement learning but is largely neglected in state-of-the-art research due to a strong focus on state-based centralized training for decentralized execution (CTDE) and benchmarks that lack sufficient stochasticity like StarCraft Multi-Agent Challenge (SMAC). In this paper, we propose Attention-based Embeddings of Recurrence In multi-Agent Learning (AERIAL) to approximate value functions under stochastic partial observability. AERIAL replaces the true state with a learned representation of multi-agent recurrence, considering more accurate information about decentralized agent decisions than state-based CTDE. We then introduce MessySMAC, a modified version of SMAC with stochastic observations and higher variance in initial states, to provide a more general and configurable benchmark regarding stochastic partial observability. We evaluate AERIAL in Dec-Tiger as well as in a variety of SMAC and MessySMAC maps, and compare the results with state-based CTDE. Furthermore, we evaluate the robustness of AERIAL and state-based CTDE against various stochasticity configurations in MessySMAC.

Download here

CROP: Towards Distributional-Shift Robust Reinforcement Learning Using Compact Reshaped Observation Processing

Published in , 2023

The safe application of reinforcement learning (RL) requires generalization from limited training data to unseen scenarios. Yet, fulfilling tasks under changing circumstances is a key challenge in RL. Current state-of-the-art approaches for generalization apply data augmentation techniques to increase the diversity of training data. Even though this prevents overfitting to the training environment(s), it hinders policy optimization. Crafting a suitable observation, only containing crucial information, has been shown to be a challenging task itself. To improve data efficiency and generalization capabilities, we propose Compact Reshaped Observation Processing (CROP) to reduce the state information used for policy optimization. By providing only relevant information, overfitting to a specific training layout is precluded and generalization to unseen environments is improved. We formulate three CROPs that can be applied to fully observable observation- and action-spaces and provide a methodical foundation. We empirically show the improvements of CROP in a distributionally shifted safety gridworld. We furthermore provide benchmark comparisons to full observability and data-augmentation in two different-sized procedurally generated mazes.

Download here

Multi-Agent Quantum Reinforcement Learning using Evolutionary Optimization

Published in , 2024

Multi-Agent Reinforcement Learning is becoming increasingly important in times of autonomous driving and other smart industrial applications. Simultaneously, a promising new approach to Reinforcement Learning arises using the inherent properties of quantum mechanics, reducing the trainable parameters of a model significantly. However, gradient-based Multi-Agent Quantum Reinforcement Learning methods often struggle with barren plateaus, holding them back from matching the performance of classical approaches. We build upon an existing approach for gradient-free Quantum Reinforcement Learning and propose three genetic variations with Variational Quantum Circuits for Multi-Agent Reinforcement Learning using evolutionary optimization. We evaluate our genetic variations in the Coin Game environment and also compare them to classical approaches. We show that our Variational Quantum Circuit approaches perform significantly better compared to a neural network with a similar amount of trainable parameters. Compared to the larger neural network, our approaches achieve similar results using 97.88% fewer parameters.

Download here

Adaptive Anytime Multi-Agent Path Finding Using Bandit-Based Large Neighborhood Search

Published in , 2024

Anytime multi-agent path finding (MAPF) is a promising approach to scalable path optimization in large-scale multi-agent systems. State-of-the-art anytime MAPF is based on Large Neighborhood Search (LNS), where a fast initial solution is iteratively optimized by destroying and repairing a fixed number of parts, i.e., the neighborhood, of the solution, using randomized destroy heuristics and prioritized planning. Despite their recent success in various MAPF instances, current LNS-based approaches lack exploration and flexibility due to greedy optimization with a fixed neighborhood size which can lead to low quality solutions in general. So far, these limitations have been addressed with extensive prior effort in tuning or offline machine learning beyond actual planning. In this paper, we focus on online learning in LNS and propose Bandit-based Adaptive LArge Neighborhood search Combined with Exploration (BALANCE). BALANCE uses a bi-level multi-armed bandit scheme to adapt the selection of destroy heuristics and neighborhood sizes on the fly during search. We evaluate BALANCE on multiple maps from the MAPF benchmark set and empirically demonstrate cost improvements of at least 50% compared to state-of-the-art anytime MAPF in large-scale scenarios. We find that Thompson Sampling performs particularly well compared to alternative multi-armed bandit algorithms.
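
A minimal sketch of a bi-level bandit scheme is given below, assuming Beta-Bernoulli Thompson Sampling and a binary "did the cost improve" signal. The heuristic labels, the candidate neighborhood sizes, and the binary reward are illustrative assumptions. This is not the BALANCE implementation.

```python
import random


class BetaThompsonBandit:
    """Beta-Bernoulli Thompson Sampling over a fixed set of arms."""

    def __init__(self, arms, rng):
        self.arms = list(arms)
        self.rng = rng
        # Beta(1, 1) prior, i.e., uniform over success probabilities.
        self.alpha = {a: 1.0 for a in self.arms}
        self.beta = {a: 1.0 for a in self.arms}

    def select(self):
        # Draw one sample per arm and play the arm with the largest sample.
        samples = {a: self.rng.betavariate(self.alpha[a], self.beta[a])
                   for a in self.arms}
        return max(samples, key=samples.get)

    def update(self, arm, success):
        if success:
            self.alpha[arm] += 1.0
        else:
            self.beta[arm] += 1.0


# Placeholder arms (assumed for illustration only).
HEURISTICS = ["heuristic_a", "heuristic_b", "heuristic_c"]
SIZES = [2, 4, 8, 16, 32]

rng = random.Random(0)
top = BetaThompsonBandit(HEURISTICS, rng)            # level 1: destroy heuristic
bottom = {h: BetaThompsonBandit(SIZES, rng) for h in HEURISTICS}  # level 2: size


def choose():
    """Pick a destroy heuristic first, then a neighborhood size for it."""
    heuristic = top.select()
    size = bottom[heuristic].select()
    return heuristic, size


def feedback(heuristic, size, improved):
    """Report whether the destroy-and-repair step improved the solution."""
    top.update(heuristic, improved)
    bottom[heuristic].update(size, improved)
```

In an LNS loop, `choose()` would be called before each destroy-and-repair iteration and `feedback(...)` afterwards, so both levels adapt during the search.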

Download here

Generative Curricula for Multi-Agent Path Finding via Unsupervised and Reinforcement Learning

Published in , 2024

Multi-Agent Path Finding (MAPF) is the challenging problem of finding collision-free paths for multiple agents, which has a wide range of applications, such as automated warehouses, smart manufacturing, and traffic management. Recently, machine learning-based approaches have become popular in addressing MAPF problems in a decentralized and potentially generalizing way. Most learning-based MAPF approaches use reinforcement and imitation learning to train agent policies for decentralized execution under partial observability. However, current state-of-the-art approaches suffer from a prevalent bias to micro-aspects of particular MAPF problems, such as congestions in corridors and potential delays caused by single agents, leading to tight specializations through extensive engineering via oversized models, reward shaping, path finding algorithms, and communication. These specializations are generally detrimental to the sample efficiency, i.e., the learning progress given a certain amount of experience, and generalization to previously unseen scenarios. In contrast, curriculum learning offers an elegant and much simpler way of training agent policies in a step-by-step manner to master all aspects implicitly without extensive engineering. In this paper, we propose a generative curriculum approach to learning-based MAPF using Variational Autoencoder Utilized Learning of Terrains (VAULT). We introduce a two-stage framework to (I) train the VAULT via unsupervised learning to obtain a latent space representation of maps and (II) use the VAULT to generate curricula in order to improve sample efficiency and generalization of learning-based MAPF methods. For the second stage, we propose a bi-level curriculum scheme by combining our VAULT curriculum with a low-level curriculum method to improve sample efficiency further. 
Our framework is designed in a modular and general way, where each proposed component serves its purpose in a black-box manner without considering specific micro-aspects of the underlying problem. We empirically evaluate our approach in maps of the public MAPF benchmark set as well as novel artificial maps generated with the VAULT. Our results demonstrate the effectiveness of the VAULT as a map generator and our VAULT curriculum in improving sample efficiency and generalization of learning-based MAPF methods compared to alternative approaches. We also demonstrate how data pruning can further reduce the dependence on available maps without affecting the generalization potential of our approach.

Download here

Quantum Circuit Design: A Reinforcement Learning Challenge

Published in , 2024

Quantum computing (QC) in the current NISQ era is still limited. To gain early insights and advantages, hybrid applications that mitigate those shortcomings are widely considered. Hybrid quantum machine learning (QML) comprises both the application of QC to improve machine learning (ML), and the application of ML to improve QC architectures. This work considers the latter, focusing on leveraging reinforcement learning (RL) to improve current QC approaches. We therefore introduce various generic challenges arising from quantum architecture search and quantum circuit optimization that RL algorithms need to solve to provide benefits for more complex applications and combinations of those. Building upon these challenges, we propose a concrete framework, formalized as a Markov decision process, to enable learning policies that are capable of controlling a universal set of quantum gates. Furthermore, we provide benchmark results to assess shortcomings and strengths of current state-of-the-art algorithms.

Download here

Anytime Multi-Agent Path Finding using Operator Parallelism in Large Neighborhood Search

Published in , 2024

Multi-Agent Path Finding (MAPF) is the problem of finding a set of collision-free paths for multiple agents in a shared environment while minimizing the sum of travel times. Since the MAPF problem is NP-hard to solve optimally, anytime algorithms are promising to quickly find a solution and keep optimizing it until interrupted. The current state-of-the-art anytime algorithm for MAPF is based on Large Neighborhood Search (LNS), called MAPF-LNS, which is a combinatorial search algorithm that iteratively destroys and repairs a subset of collision-free paths in order to optimize the sum of travel times. However, the destroy and repair operations in MAPF-LNS can be time-consuming, thus limiting the effectiveness due to fewer iterations and scalability w.r.t. the number of agents. In this paper, we propose Destroy-Repair Operation Parallelism for LNS (DROP-LNS), a parallel framework that performs multiple destroy and repair processes simultaneously to explore a larger search space under a limited time budget. Unlike MAPF-LNS, DROP-LNS is able to exploit parallelized hardware to improve the solution quality. We extend DROP-LNS to two alternatives and conduct experimental evaluations to compare the performance. The results show that DROP-LNS significantly outperforms the state-of-the-art.

Download here

Confidence-Based Curriculum Learning for Multi-Agent Path Finding

Published in , 2024

A wide range of real-world applications can be formulated as a Multi-Agent Path Finding (MAPF) problem, where the goal is to find collision-free paths for multiple agents with individual start and goal locations. State-of-the-art MAPF solvers are mainly centralized and depend on global information, which limits their scalability and flexibility regarding changes or new maps that would require expensive replanning. Multi-agent reinforcement learning (MARL) offers an alternative way by learning decentralized policies that can generalize over a variety of maps. While there exist some prior works that attempt to connect both areas, the proposed techniques are heavily engineered and very complex due to the integration of many mechanisms that limit generality and are expensive to use. We argue that much simpler and general approaches are needed to bring the areas of MARL and MAPF closer together with significantly lower costs. In this paper, we propose Confidence-based Auto-Curriculum for Team Update Stability (CACTUS) as a lightweight MARL approach to MAPF. CACTUS defines a simple reverse curriculum scheme, where the goal of each agent is randomly placed within an allocation radius around the agent’s start location. The allocation radius increases gradually as all agents improve, which is assessed by a confidence-based measure. We evaluate CACTUS in various maps of different sizes, obstacle densities, and numbers of agents. Our experiments demonstrate better performance and generalization capabilities than state-of-the-art approaches while using less than 600,000 trainable parameters, which is less than 5% of the neural network size of current MARL approaches to MAPF.
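
The reverse curriculum idea can be sketched as follows. The abstract does not specify the distance metric or the confidence-based measure, so Manhattan distance and a "mean minus standard deviation of recent success rates" rule are used here purely as stand-in assumptions. The function names and the threshold are hypothetical.

```python
import random
import statistics


def sample_goal(start, radius, free_cells, rng):
    """Pick a random free cell within `radius` (Manhattan distance) of `start`.

    Falls back to `start` itself if no other free cell is in range.
    """
    sx, sy = start
    candidates = [(x, y) for (x, y) in free_cells
                  if (x, y) != start and abs(x - sx) + abs(y - sy) <= radius]
    return rng.choice(candidates) if candidates else start


def next_radius(radius, success_rates, threshold=0.8, max_radius=None):
    """Grow the allocation radius by one once agents are reliably successful.

    Assumed stand-in rule: grow when mean - stdev of the recent success
    rates reaches `threshold`. The measure used in the paper may differ.
    """
    if len(success_rates) < 2:
        return radius  # not enough evidence yet
    lower = statistics.mean(success_rates) - statistics.stdev(success_rates)
    if lower >= threshold:
        radius += 1
    if max_radius is not None:
        radius = min(radius, max_radius)
    return radius
```

Training would start with a small radius, so goals are close to the start locations, and call `next_radius` after each evaluation round to enlarge the sampling region.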

Download here

Emergent Cooperation from Mutual Acknowledgment Exchange in Multi-Agent Reinforcement Learning

Published in , 2024

Peer incentivization (PI) is a recent approach where all agents learn to reward or penalize each other in a distributed fashion, which often leads to emergent cooperation. Current PI mechanisms implicitly assume a flawless communication channel in order to exchange rewards. These rewards are directly incorporated into the learning process without any chance to respond with feedback. Furthermore, most PI approaches rely on global information, which limits scalability and applicability to real-world scenarios where only local information is accessible. In this paper, we propose Mutual Acknowledgment Token Exchange (MATE), a PI approach defined by a two-phase communication protocol to exchange acknowledgment tokens as incentives to shape individual rewards mutually. All agents condition their token transmissions on the locally estimated quality of their own situations based on environmental rewards and received tokens. MATE is completely decentralized and only requires local communication and information. We evaluate MATE in three social dilemma domains. Our results show that MATE is able to achieve and maintain significantly higher levels of cooperation than previous PI approaches. In addition, we evaluate the robustness of MATE in more realistic scenarios, where agents can deviate from the protocol and communication failures can occur. We also evaluate the sensitivity of MATE w.r.t. the choice of token values.

Download here

Challenges for Reinforcement Learning in Quantum Circuit Design

Published in , 2024

Quantum computing (QC) in the current NISQ era is still limited in size and precision. Hybrid applications mitigating those shortcomings are prevalent to gain early insight and advantages. Hybrid quantum machine learning (QML) comprises both the application of QC to improve machine learning (ML) and ML to improve QC architectures. This work considers the latter, leveraging reinforcement learning (RL) to improve the search for viable quantum architectures, which we formalize by a set of generic challenges. Furthermore, we propose a concrete framework, formalized as a Markov decision process, to enable learning policies capable of controlling a universal set of continuously parameterized quantum gates. Finally, we provide benchmark comparisons to assess the shortcomings and strengths of current state-of-the-art RL algorithms.

Download here

Anytime Multi-Agent Path Finding with an Adaptive Delay-Based Heuristic

Published in , 2025

Anytime multi-agent path finding (MAPF) is a promising approach to scalable and collision-free path optimization in multi-agent systems. MAPF-LNS, based on Large Neighborhood Search (LNS), is the current state-of-the-art approach where a fast initial solution is iteratively optimized by destroying and repairing selected paths of the solution. Current MAPF-LNS variants commonly use an adaptive selection mechanism to choose among multiple destroy heuristics. However, to determine promising destroy heuristics, MAPF-LNS requires a considerable amount of exploration time. As common destroy heuristics are stationary, i.e., non-adaptive, any performance bottleneck caused by them cannot be overcome by adaptive heuristic selection alone, thus limiting the overall effectiveness of MAPF-LNS. In this paper, we propose Adaptive Delay-based Destroy-and-Repair Enhanced with Success-based Self-Learning (ADDRESS) as a single-destroy-heuristic variant of MAPF-LNS. ADDRESS applies restricted Thompson Sampling to the top-K set of the most delayed agents to select a seed agent for adaptive LNS neighborhood generation. We evaluate ADDRESS in multiple maps from the MAPF benchmark set and demonstrate cost improvements by at least 50% in large-scale scenarios with up to a thousand agents, compared with the original MAPF-LNS and other state-of-the-art methods.
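
Restricting Thompson Sampling to the top-K most delayed agents can be sketched as below. The Beta-Bernoulli model, the data layout (dictionaries keyed by agent id), and the function name are assumptions for illustration, not the ADDRESS implementation.

```python
import random


def select_seed_agent(delays, alpha, beta, k, rng):
    """Choose a seed agent among the k most delayed agents.

    delays: dict mapping agent id -> current delay
    alpha, beta: dicts with per-agent Beta posterior parameters
    """
    # Restrict the candidate set to the k agents with the largest delays.
    top_k = sorted(delays, key=delays.get, reverse=True)[:k]
    # Thompson Sampling: one posterior draw per candidate, pick the largest.
    samples = {a: rng.betavariate(alpha[a], beta[a]) for a in top_k}
    return max(samples, key=samples.get)


def update(alpha, beta, agent, improved):
    """Record whether the neighborhood seeded by `agent` improved the cost."""
    if improved:
        alpha[agent] += 1.0
    else:
        beta[agent] += 1.0
```

The selected seed agent would then be used to build the LNS neighborhood, and `update` would be called once the repair step reports whether the solution cost went down.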

Download here

Counterfactual Online Learning for Open-Loop Monte-Carlo Planning

Published in , 2025

Monte-Carlo Tree Search (MCTS) is a popular approach to online planning under uncertainty. While MCTS uses statistical sampling via multi-armed bandits to avoid exhaustive search in complex domains, common closed-loop approaches typically construct enormous search trees to consider a large number of potential observations and actions. On the other hand, open-loop approaches offer better memory efficiency by ignoring observations but are generally not competitive with closed-loop MCTS in terms of performance – even with commonly integrated human knowledge. In this paper, we propose Counterfactual Open-loop Reasoning with Ad hoc Learning (CORAL) for open-loop MCTS, using a causal multi-armed bandit approach with unobserved confounders (MABUC). CORAL consists of two online learning phases that are conducted during the open-loop search. In the first phase, an intent policy is learned based on preferred actions. In the second phase, a counterfactual policy is learned with MABUCs to make a final decision using the previously learned intent policy. We evaluate CORAL in four POMDP benchmark scenarios and compare it with closed-loop and open-loop alternatives. In contrast to standard open-loop MCTS, CORAL achieves competitive performance compared with closed-loop algorithms while constructing significantly smaller search trees.

Download here

Truncated Counterfactual Learning for Anytime Multi-Agent Path Finding

Published in , 2026

Anytime multi-agent path finding (MAPF) is a promising approach to scalable and collision-free path optimization in multi-agent systems. MAPF-LNS, based on Large Neighborhood Search (LNS), is the current state-of-the-art approach where a fast initial solution is iteratively optimized by destroying and repairing selected paths, i.e., a neighborhood, of the solution. Delay-based MAPF-LNS has demonstrated particular effectiveness in generating promising neighborhoods via seed agents, according to their delays. Seed agents are selected using handcrafted strategies or online learning, where the former relies on human intuition about underlying structures, while the latter conducts black-box optimization, ignoring any structure. In this paper, we propose Truncated Adaptive Counterfactual K-ranked LEarning (TACKLE) to select seed agents via informed online learning by leveraging handcrafted strategies as human intuition. We show theoretically that TACKLE dominates its handcrafted and black-box learning counterparts in the limit. Our experiments demonstrate cost improvements of at least 60% in instances with one thousand agents, compared with state-of-the-art anytime solvers.

Download here

Spatially Grouped Curriculum Learning for Multi-Agent Path Finding

Published in , 2026

Multi-agent path finding (MAPF) is the challenging problem of finding conflict-free paths with minimal costs for multiple agents. While traditional MAPF solvers are centralized using heuristic search, reinforcement learning (RL) is becoming increasingly popular due to its potential to learn decentralized and generalizing policies. RL-based MAPF must cope with spatial coordination, which is often addressed by combining independent training with ad hoc measures like replanning and communication. Such ad hoc measures often complicate the approach and require knowledge beyond the actual accessible information in RL, such as the full map occupation or broadcast communication channels, which limits generalizability, effectiveness, and sample efficiency. In this paper, we propose Partitioned Attention-based Reverse Curricula for Enhanced Learning (PARCEL), considering a bounding region for each agent. PARCEL trains all agents with overlapping regions jointly via self-attention to avoid potential conflicts. By employing a reverse curriculum, where the bounding regions grow as the policies improve, all agents will eventually merge into a single coordinated group. We evaluate PARCEL in two simple coordination tasks and four MAPF benchmark maps. Compared with state-of-the-art RL-based MAPF methods, PARCEL demonstrates better effectiveness and sample efficiency without ad hoc measures.

Download here

research

talks

Talk 1 on Relevant Topic in Your Field

Published:

This is a description of your talk, which is a markdown file that can be all markdown-ified like any other post. Yay markdown!

Conference Proceeding talk 3 on Relevant Topic in Your Field

Published:

This is a description of your conference proceedings talk, note the different field in type. You can put anything in this field.

teaching

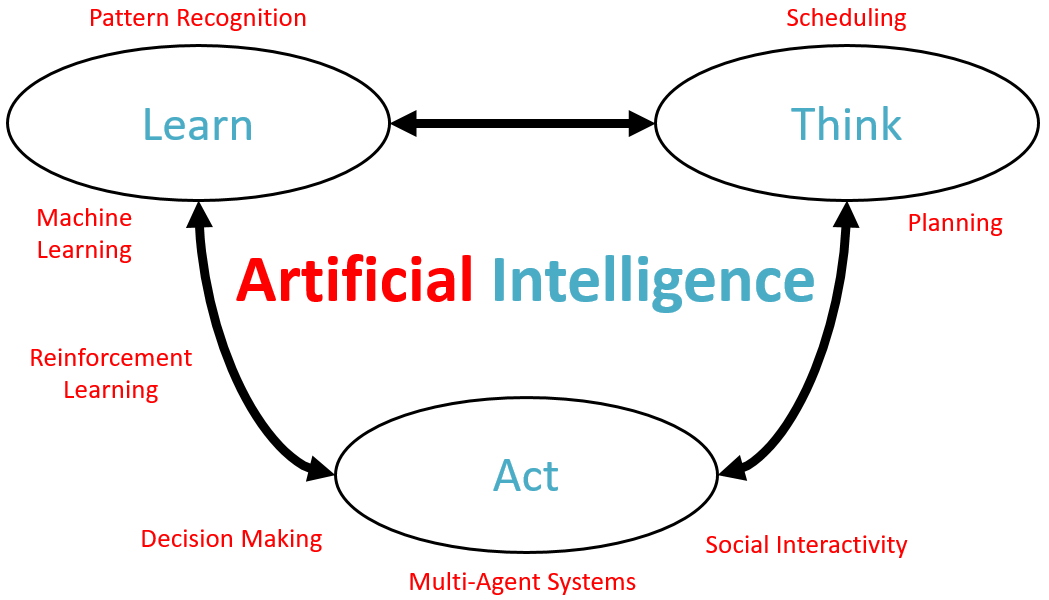

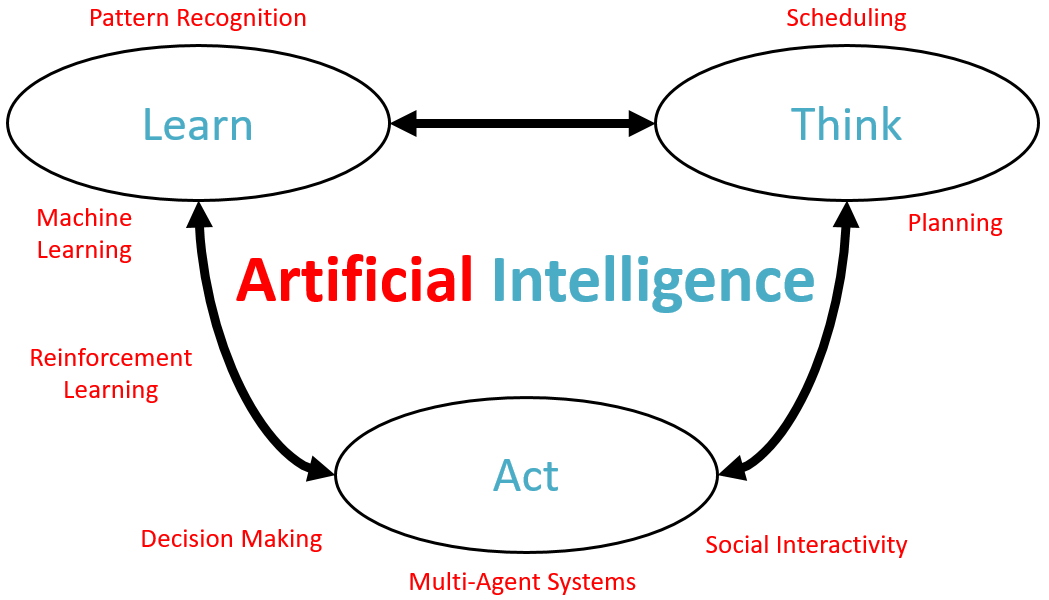

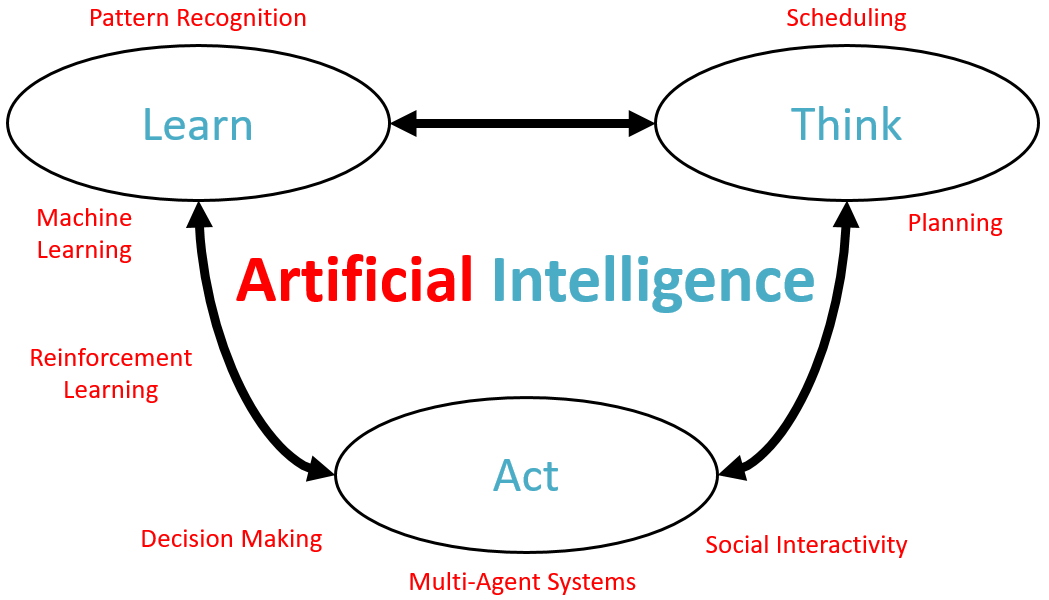

Artificial Intelligence

CSCI 599: Autonomous Decision-Making

Autonomous Systems

Coding Sessions

Directed Research

Mobile and Distributed Systems

Student Theses
