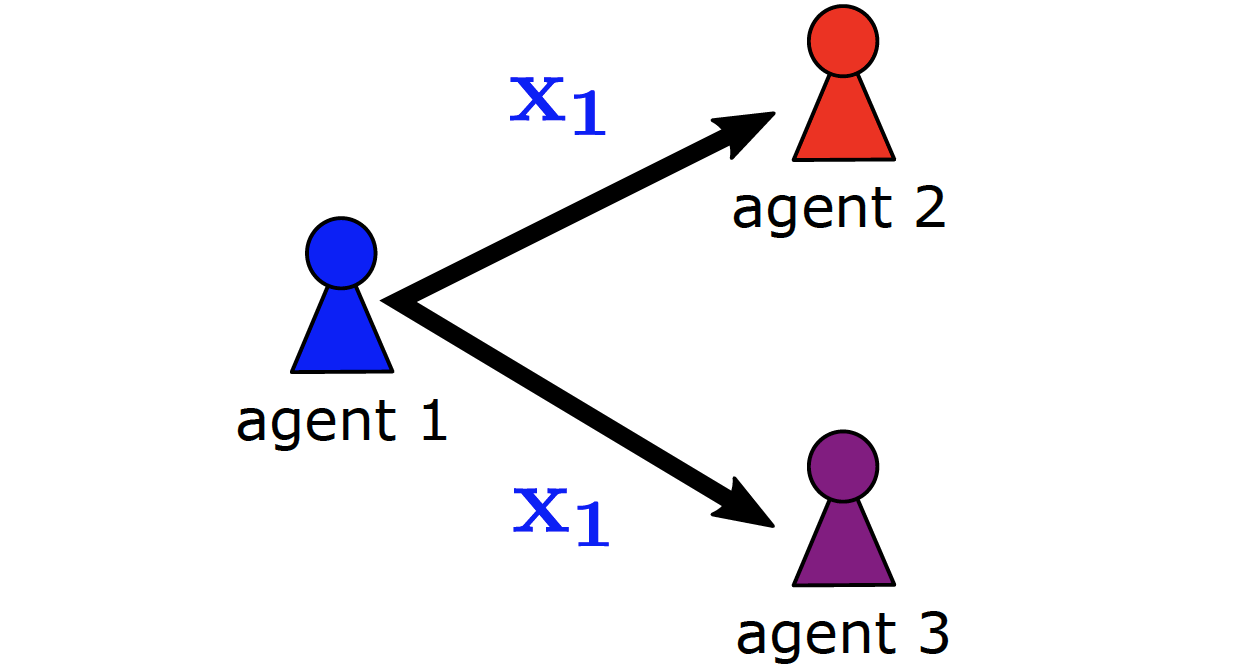

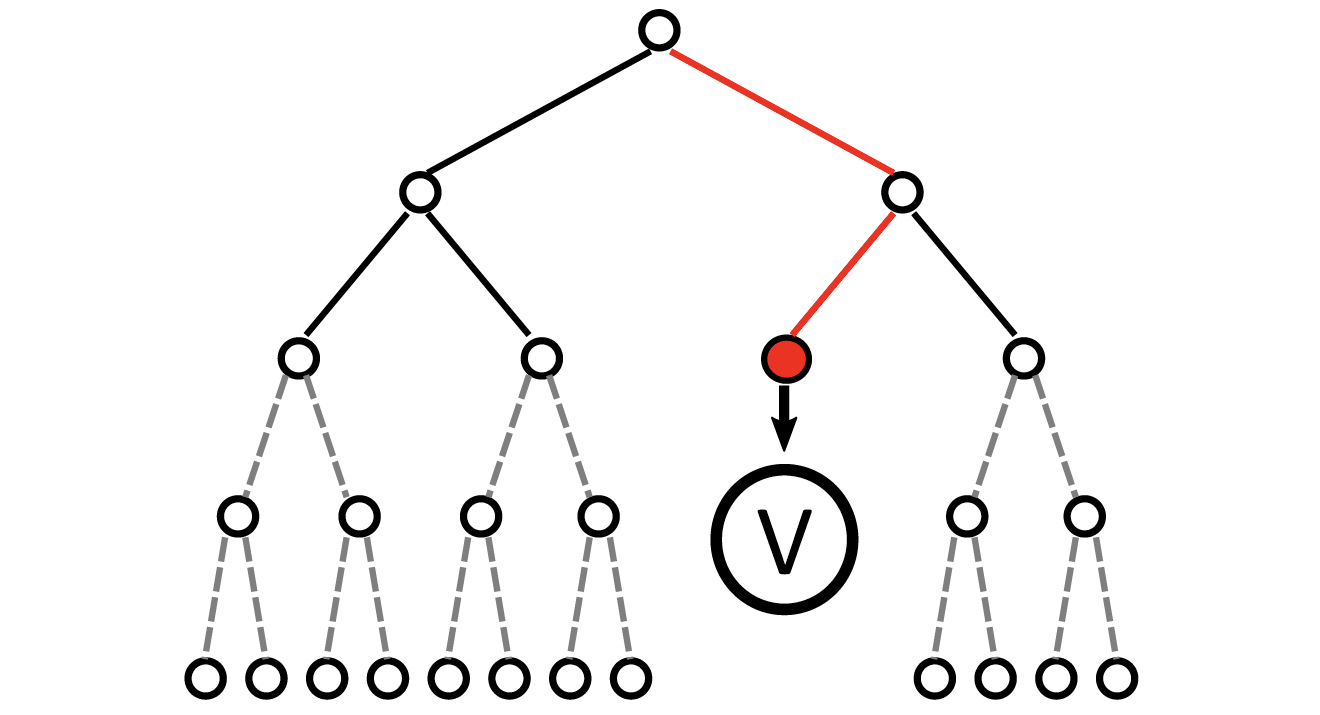

Multi-agent path finding (MAPF) is the challenging problem of finding conflict-free paths with minimal costs for multiple agents. While traditional MAPF solvers are centralized using heuristic search, reinforcement learning (RL) is becoming increasingly popular due to its potential to learn decentralized and generalizing policies. RL-based MAPF must cope with spatial coordination, which is often addressed by combining independent training with ad hoc measures like replanning and communication. Such ad hoc measures often complicate the approach and require knowledge beyond the actual accessible information in RL, such as the full map occupation or broadcast communication channels, which limits generalizability, effectiveness, and sample efficiency. In this paper, we propose Partitioned Attention-based Reverse Curricula for Enhanced Learning (PARCEL), considering a bounding region for each agent. PARCEL trains all agents with overlapping regions jointly via self-attention to avoid potential conflicts. By employing a reverse curriculum, where the bounding regions grow as the policies improve, all agents will eventually merge into a single coordinated group. We evaluate PARCEL in two simple coordination tasks and four MAPF benchmark maps. Compared with state-of-the-art RL-based MAPF methods, PARCEL demonstrates better effectiveness and sample efficiency without ad hoc measures.
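The grouping idea in the abstract, where agents with overlapping bounding regions are trained jointly and the groups merge as the regions grow, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration rather than the paper's implementation: the regions are taken to be axis-aligned squares of half-width `radius` centered on each agent, and the names `regions_overlap` and `group_agents` are hypothetical. The sketch covers only the spatial grouping step, not the self-attention training or the criterion for growing the regions.

```python
from typing import Dict, List, Tuple

Position = Tuple[int, int]


def regions_overlap(a: Position, b: Position, radius: int) -> bool:
    """Assumed overlap test: two squares of half-width `radius` centered at
    a and b share at least one cell iff the centers differ by at most
    2 * radius along both axes."""
    return abs(a[0] - b[0]) <= 2 * radius and abs(a[1] - b[1]) <= 2 * radius


def group_agents(positions: List[Position], radius: int) -> List[List[int]]:
    """Partition agent indices into groups of transitively overlapping
    regions, using a simple union-find structure."""
    parent = list(range(len(positions)))

    def find(i: int) -> int:
        # Follow parent links to the root, halving the path on the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Merge the groups of every pair of agents whose regions overlap.
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if regions_overlap(positions[i], positions[j], radius):
                parent[find(i)] = find(j)

    groups: Dict[int, List[int]] = {}
    for i in range(len(positions)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


if __name__ == "__main__":
    agents = [(0, 0), (1, 1), (10, 10), (20, 0)]
    # Growing the radius mimics a curriculum stage with larger regions.
    for radius in (0, 1, 5):
        print(radius, group_agents(agents, radius))
```

With these example positions, the agents start as four singleton groups at radius 0, the two nearby agents merge at radius 1, and all four form a single group at radius 5, which mirrors the eventual merge into one coordinated group described above.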

@article{ phan2AAAI26,
  author = "Thomy Phan and Sven Koenig",
  title = "Spatially Grouped Curriculum Learning for Multi-Agent Path Finding",
  year = "2026",
  abstract = "Multi-agent path finding (MAPF) is the challenging problem of finding conflict-free paths with minimal costs for multiple agents. While traditional MAPF solvers are centralized using heuristic search, reinforcement learning (RL) is becoming increasingly popular due to its potential to learn decentralized and generalizing policies. RL-based MAPF must cope with spatial coordination, which is often addressed by combining independent training with ad hoc measures like replanning and communication. Such ad hoc measures often complicate the approach and require knowledge beyond the actual accessible information in RL, such as the full map occupation or broadcast communication channels, which limits generalizability, effectiveness, and sample efficiency. In this paper, we propose Partitioned Attention-based Reverse Curricula for Enhanced Learning (PARCEL), considering a bounding region for each agent. PARCEL trains all agents with overlapping regions jointly via self-attention to avoid potential conflicts. By employing a reverse curriculum, where the bounding regions grow as the policies improve, all agents will eventually merge into a single coordinated group. We evaluate PARCEL in two simple coordination tasks and four MAPF benchmark maps. Compared with state-of-the-art RL-based MAPF methods, PARCEL demonstrates better effectiveness and sample efficiency without ad hoc measures.",
  url = "https://thomyphan.github.io/files/2026-aaai-preprint2.pdf",
  eprint = "https://thomyphan.github.io/files/2026-aaai-preprint2.pdf",
  location = "Singapore",
  journal = "Proceedings of the AAAI Conference on Artificial Intelligence",
}

Related Articles

- T. Phan et al., “Truncated Counterfactual Learning for Anytime Multi-Agent Path Finding”, AAAI 2026

- T. Phan et al., “Generative Curricula for Multi-Agent Path Finding via Unsupervised and Reinforcement Learning”, JAIR 2025

- T. Phan et al., “Anytime Multi-Agent Path Finding with an Adaptive Delay-Based Heuristic”, AAAI 2025

- T. Phan et al., “Confidence-Based Curriculum Learning for Multi-Agent Path Finding”, AAMAS 2024

- S. Chan et al., “Anytime Multi-Agent Path Finding using Operator Parallelism in Large Neighborhood Search”, AAMAS 2024

- T. Phan et al., “Adaptive Anytime Multi-Agent Path Finding using Bandit-Based Large Neighborhood Search”, AAAI 2024

- P. Altmann et al., “CROP: Towards Distributional-Shift Robust Reinforcement Learning using Compact Reshaped Observation Processing”, IJCAI 2023

- T. Phan et al., “Attention-Based Recurrence for Multi-Agent Reinforcement Learning under Stochastic Partial Observability”, ICML 2023

- T. Phan et al., “VAST: Value Function Factorization with Variable Agent Sub-Teams”, NeurIPS 2021

- T. Gabor et al., “The Scenario Coevolution Paradigm: Adaptive Quality Assurance for Adaptive Systems”, STTT 2020

- T. Phan et al., “A Distributed Policy Iteration Scheme for Cooperative Multi-Agent Policy Approximation”, ALA 2020

- T. Gabor et al., “Scenario Co-Evolution for Reinforcement Learning on a Grid World Smart Factory Domain”, GECCO 2019

- T. Phan et al., “Distributed Policy Iteration for Scalable Approximation of Cooperative Multi-Agent Policies”, AAMAS 2019

- T. Phan et al., “Leveraging Statistical Multi-Agent Online Planning with Emergent Value Function Approximation”, AAMAS 2018

Relevant Research Areas