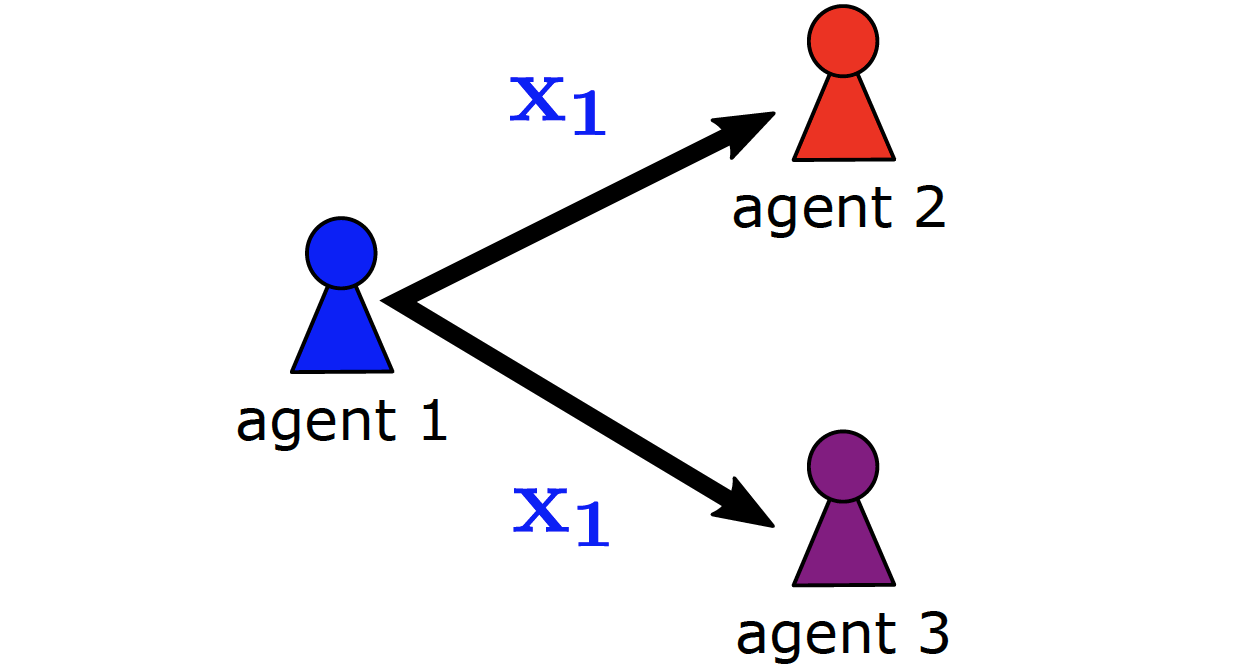

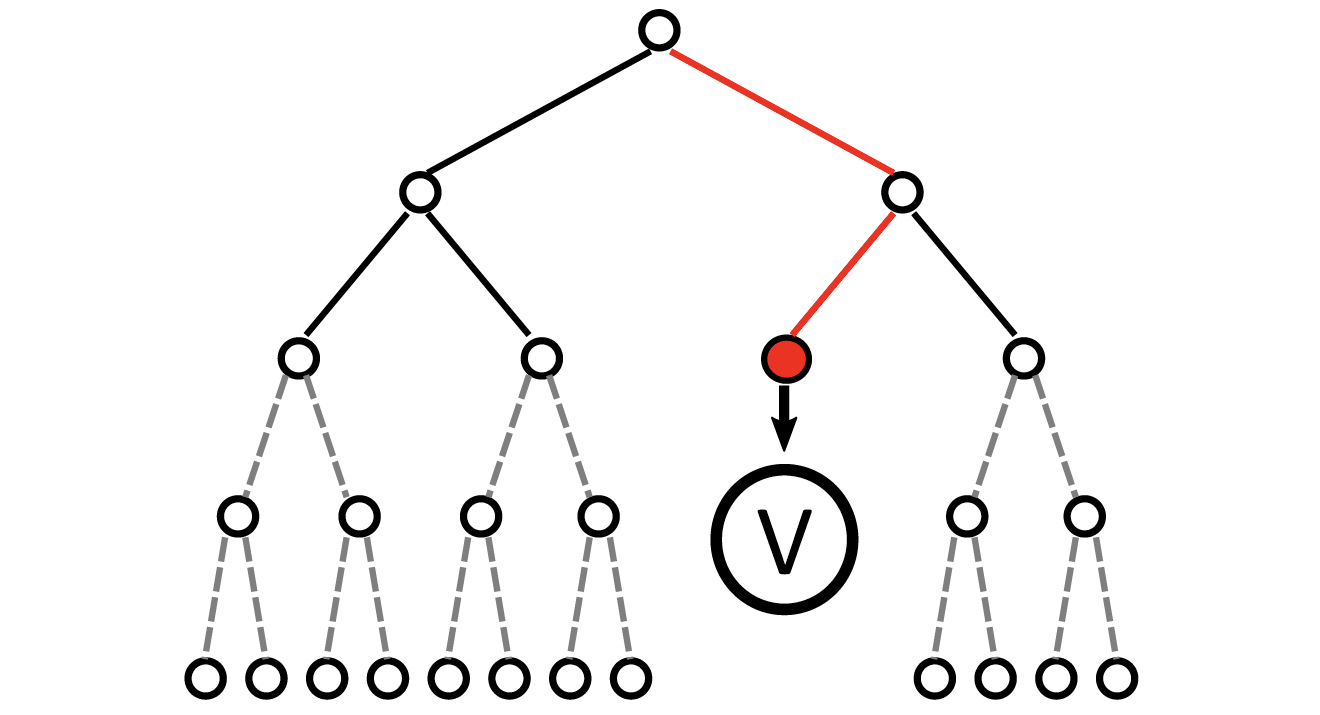

Making decisions is a great challenge in distributed autonomous environments due to enormous state spaces and uncertainty. Many online planning algorithms rely on statistical sampling to avoid searching the whole state space, while still being able to make acceptable decisions. However, planning often has to be performed under strict computational constraints, which severely limits online planning in multi-agent systems and can lead to poor system performance, especially in stochastic domains. In this paper, we propose Emergent Value function Approximation for Distributed Environments (EVADE), an approach to integrate global experience into multi-agent online planning in stochastic domains to consider global effects during local planning. For this purpose, a value function is approximated online based on the emergent system behaviour by using methods of reinforcement learning. We empirically evaluated EVADE with two statistical multi-agent online planning algorithms in a highly complex and stochastic smart factory environment, where multiple agents need to process various items at a shared set of machines. Our experiments show that EVADE can effectively improve the performance of multi-agent online planning while offering efficiency w.r.t. the breadth and depth of the planning process.
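The general idea of combining sampling-based online planning with an online-learned value function can be illustrated with a minimal single-agent sketch. This is not the EVADE algorithm from the paper: the chain environment, the random-rollout planner, the tabular TD(0) update, and all names (`step`, `rollout_return`, `plan`, `run`, `GOAL`, `GAMMA`) are assumptions chosen only for illustration. The point it shows is that a depth-limited planner can bootstrap from a learned value estimate at its horizon, so experience gathered during execution feeds back into planning.

```python
import random

GOAL = 6      # hypothetical chain of states 0..GOAL; reward 1 on reaching GOAL
GAMMA = 0.95  # discount factor (assumed)

def step(state, action, rng):
    """Toy stochastic chain: the action (-1 or +1) is flipped with probability 0.1."""
    if rng.random() < 0.1:
        action = -action
    nxt = min(max(state + action, 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def rollout_return(state, first_action, depth, value, rng):
    """Sample one depth-limited rollout; bootstrap with the learned value at the horizon."""
    total, discount, action = 0.0, 1.0, first_action
    for _ in range(depth):
        state, reward, done = step(state, action, rng)
        total += discount * reward
        discount *= GAMMA
        if done:
            return total
        action = rng.choice((-1, 1))  # uniform random rollout policy
    return total + discount * value[state]

def plan(state, value, rng, depth=2, samples=20):
    """Return the action with the highest mean sampled return (ties broken randomly)."""
    def score(action):
        returns = (rollout_return(state, action, depth, value, rng) for _ in range(samples))
        return sum(returns) / samples
    actions = [-1, 1]
    rng.shuffle(actions)
    return max(actions, key=score)

def run(episodes, seed=0, alpha=0.2):
    """Alternate planning and acting; update the value table from observed transitions."""
    rng = random.Random(seed)
    value = [0.0] * (GOAL + 1)
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # step limit per episode
            action = plan(state, value, rng)
            nxt, reward, done = step(state, action, rng)
            target = reward + (0.0 if done else GAMMA * value[nxt])
            value[state] += alpha * (target - value[state])  # TD(0) update
            state = nxt
            if done:
                break
    return value
```

Calling `run(200)` returns the learned value table. Because the planner only looks two steps ahead, any guidance it gets in states far from the goal has to come from the bootstrapped values rather than from the rollouts themselves, which is the effect the sketch is meant to make visible.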

@inproceedings{ phanAAMAS18,

author = "Thomy Phan and Lenz Belzner and Thomas Gabor and Kyrill Schmid",

title = "Leveraging Statistical Multi-Agent Online Planning with Emergent Value Function Approximation",

year = "2018",

abstract = "Making decisions is a great challenge in distributed autonomous environments due to enormous state spaces and uncertainty. Many online planning algorithms rely on statistical sampling to avoid searching the whole state space, while still being able to make acceptable decisions. However, planning often has to be performed under strict computational constraints making online planning in multi-agent systems highly limited, which could lead to poor system performance, especially in stochastic domains. In this paper, we propose Emergent Value function Approximation for Distributed Environments (EVADE), an approach to integrate global experience into multi-agent online planning in stochastic domains to consider global effects during local planning. For this purpose, a value function is approximated online based on the emergent system behaviour by using methods of reinforcement learning. We empirically evaluated EVADE with two statistical multi-agent online planning algorithms in a highly complex and stochastic smart factory environment, where multiple agents need to process various items at a shared set of machines. Our experiments show that EVADE can effectively improve the performance of multi-agent online planning while offering efficiency w.r.t. the breadth and depth of the planning process.",

url = "https://ifaamas.org/Proceedings/aamas2018/pdfs/p730.pdf",

eprint = "https://thomyphan.github.io/files/2018-aamas.pdf",

location = "Stockholm, Sweden",

publisher = "International Foundation for Autonomous Agents and Multiagent Systems",

booktitle = "Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems",

pages = "730--738",

keywords = "online planning, value function approximation, multi-agent planning",

doi = "https://dl.acm.org/doi/abs/10.5555/3237383.3237491"

}

Related Articles

- T. Phan et al., “Spatially Grouped Curriculum Learning for Multi-Agent Path Finding”, AAAI 2026

- T. Phan et al., “Generative Curricula for Multi-Agent Path Finding via Unsupervised and Reinforcement Learning”, JAIR 2025

- T. Phan et al., “Confidence-Based Curriculum Learning for Multi-Agent Path Finding”, AAMAS 2024

- T. Phan et al., “A Distributed Policy Iteration Scheme for Cooperative Multi-Agent Policy Approximation”, ALA 2020

- T. Phan et al., “Distributed Policy Iteration for Scalable Approximation of Cooperative Multi-Agent Policies”, AAMAS 2019

- T. Phan, “Emergence and Resilience in Multi-Agent Reinforcement Learning”, PhD Thesis

Relevant Research Areas