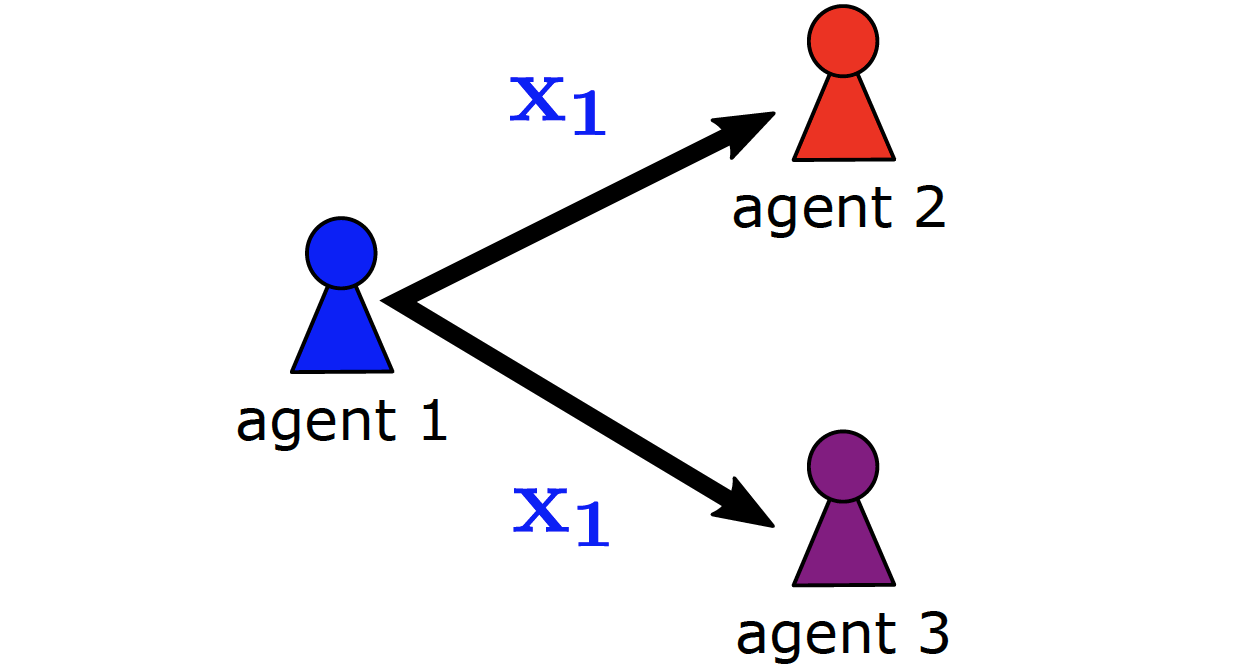

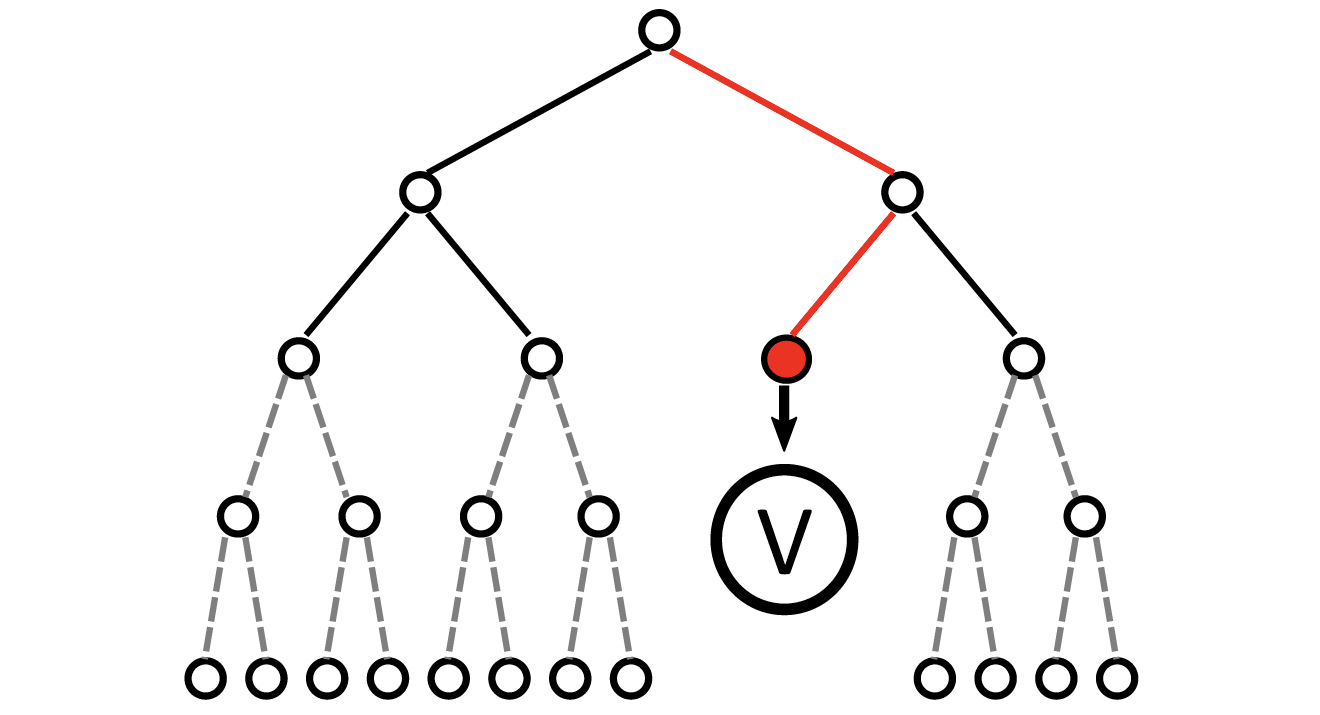

We propose Strong Emergent Policy (STEP) approximation, a scalable approach to learning strong decentralized policies for cooperative multi-agent systems (MAS) based on a distributed variant of policy iteration. To this end, we use function approximation to learn from the action recommendations of a decentralized multi-agent planning algorithm. STEP combines decentralized multi-agent planning with centralized learning and requires only a generative model for distributed black-box optimization. We experimentally evaluate STEP in two challenging, stochastic domains with large state and joint action spaces and show that, by combining multi-agent open-loop planning with centralized function approximation, STEP learns stronger policies than standard multi-agent reinforcement learning algorithms. The learned policies can be reintegrated into the multi-agent planning process to further improve performance.
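The abstract describes an iterative loop: decentralized planners produce action recommendations using a generative model, a centralized learner fits policies to those recommendations, and the learned policies are fed back into planning. The following is a minimal Python sketch of that loop under stated assumptions; it is not the authors' STEP implementation. The toy coordination domain (`generative_model`), the Monte Carlo rollout planner (`plan`, a simple stand-in for multi-agent open-loop planning), the tabular softmax learner (a stand-in for a learned function approximator), and all other names and parameters are hypothetical.

```python
import math
import random

# Toy problem sizes (hypothetical, chosen for illustration only).
N_AGENTS = 3
N_ACTIONS = 3
N_STATES = 4


def generative_model(state, joint_action, rng):
    """Hypothetical generative model returning a shared team reward.

    Agents are rewarded for coordinating on the action state % N_ACTIONS,
    with a bonus when all agents agree; Gaussian noise makes it stochastic.
    """
    target = state % N_ACTIONS
    hits = sum(1 for a in joint_action if a == target)
    bonus = 2.0 if hits == len(joint_action) else 0.0
    return hits + bonus + rng.gauss(0.0, 0.5)


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample(probs, rng):
    """Draw one action index according to the given probabilities."""
    return rng.choices(range(N_ACTIONS), weights=probs, k=1)[0]


def plan(agent, state, policy, rng, n_samples=30):
    """Decentralized planning for one agent (Monte Carlo stand-in).

    Teammates' actions are sampled from the current learned policy, which is
    how this sketch feeds the learned policy back into planning.
    """
    best_action, best_value = 0, float("-inf")
    for action in range(N_ACTIONS):
        total = 0.0
        for _ in range(n_samples):
            joint = [action if j == agent else sample(policy[j][state], rng)
                     for j in range(N_AGENTS)]
            total += generative_model(state, joint, rng)
        if total / n_samples > best_value:
            best_action, best_value = action, total / n_samples
    return best_action


def step_iteration(n_iterations=50, lr=0.5, seed=0):
    """Alternate decentralized planning and centralized policy learning."""
    rng = random.Random(seed)
    # One tabular softmax policy per agent: logits[agent][state][action].
    logits = [[[0.0] * N_ACTIONS for _ in range(N_STATES)]
              for _ in range(N_AGENTS)]
    for _ in range(n_iterations):
        policy = [[softmax(logits[i][s]) for s in range(N_STATES)]
                  for i in range(N_AGENTS)]
        # 1) Decentralized planning: collect each agent's action recommendation.
        recommendations = [(i, s, plan(i, s, policy, rng))
                           for s in range(N_STATES) for i in range(N_AGENTS)]
        # 2) Centralized learning: one cross-entropy gradient step per label.
        for i, s, a in recommendations:
            probs = softmax(logits[i][s])
            for b in range(N_ACTIONS):
                target = 1.0 if b == a else 0.0
                logits[i][s][b] -= lr * (probs[b] - target)
    return logits


def greedy(logits):
    """Return the greedy action of every agent in every state."""
    return [[max(range(N_ACTIONS), key=lambda a: logits[i][s][a])
             for s in range(N_STATES)] for i in range(N_AGENTS)]


if __name__ == "__main__":
    print(greedy(step_iteration()))
```

Running the script should print, for each agent, the coordinated action `state % 3` in every state. The tabular softmax keeps the sketch dependency-free; a realistic setup would presumably replace it with a neural network or another function approximator that generalizes across large state spaces.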

@inproceedings{phanAAMAS19,
  author = "Thomy Phan and Kyrill Schmid and Lenz Belzner and Thomas Gabor and Sebastian Feld and Claudia Linnhoff-Popien",
  title = "Distributed Policy Iteration for Scalable Approximation of Cooperative Multi-Agent Policies",
  year = "2019",
  abstract = "We propose Strong Emergent Policy (STEP) approximation, a scalable approach to learn strong decentralized policies for cooperative MAS with a distributed variant of policy iteration. For that, we use function approximation to learn from action recommendations of a decentralized multi-agent planning algorithm. STEP combines decentralized multi-agent planning with centralized learning, only requiring a generative model for distributed black box optimization. We experimentally evaluate STEP in two challenging and stochastic domains with large state and joint action spaces and show that STEP is able to learn stronger policies than standard multi-agent reinforcement learning algorithms, when combining multi-agent open-loop planning with centralized function approximation. The learned policies can be reintegrated into the multi-agent planning process to further improve performance.",
  url = "https://arxiv.org/pdf/1901.08761.pdf",
  eprint = "https://thomyphan.github.io/files/2019-aamas-ea.pdf",
  location = "Montreal QC, Canada",
  publisher = "International Foundation for Autonomous Agents and Multiagent Systems",
  booktitle = "Extended Abstracts of the 18th International Conference on Autonomous Agents and MultiAgent Systems",
  pages = "2162--2164",
  keywords = "multi-agent learning, policy iteration, multi-agent planning",
  doi = "10.5555/3306127.3332044"
}

Related Articles

- T. Phan et al., “Spatially Grouped Curriculum Learning for Multi-Agent Path Finding”, AAAI 2026

- T. Phan et al., “Generative Curricula for Multi-Agent Path Finding via Unsupervised and Reinforcement Learning”, JAIR 2025

- T. Phan et al., “Confidence-Based Curriculum Learning for Multi-Agent Path Finding”, AAMAS 2024

- T. Phan et al., “A Distributed Policy Iteration Scheme for Cooperative Multi-Agent Policy Approximation”, ALA 2020

- T. Phan et al., “Adaptive Thompson Sampling Stacks for Memory Bounded Open-Loop Planning”, IJCAI 2019

- T. Phan et al., “Memory Bounded Open-Loop Planning in Large POMDPs Using Thompson Sampling”, AAAI 2019

- T. Phan et al., “Leveraging Statistical Multi-Agent Online Planning with Emergent Value Function Approximation”, AAMAS 2018

- T. Phan, “Emergence and Resilience in Multi-Agent Reinforcement Learning”, PhD Thesis

Relevant Research Areas