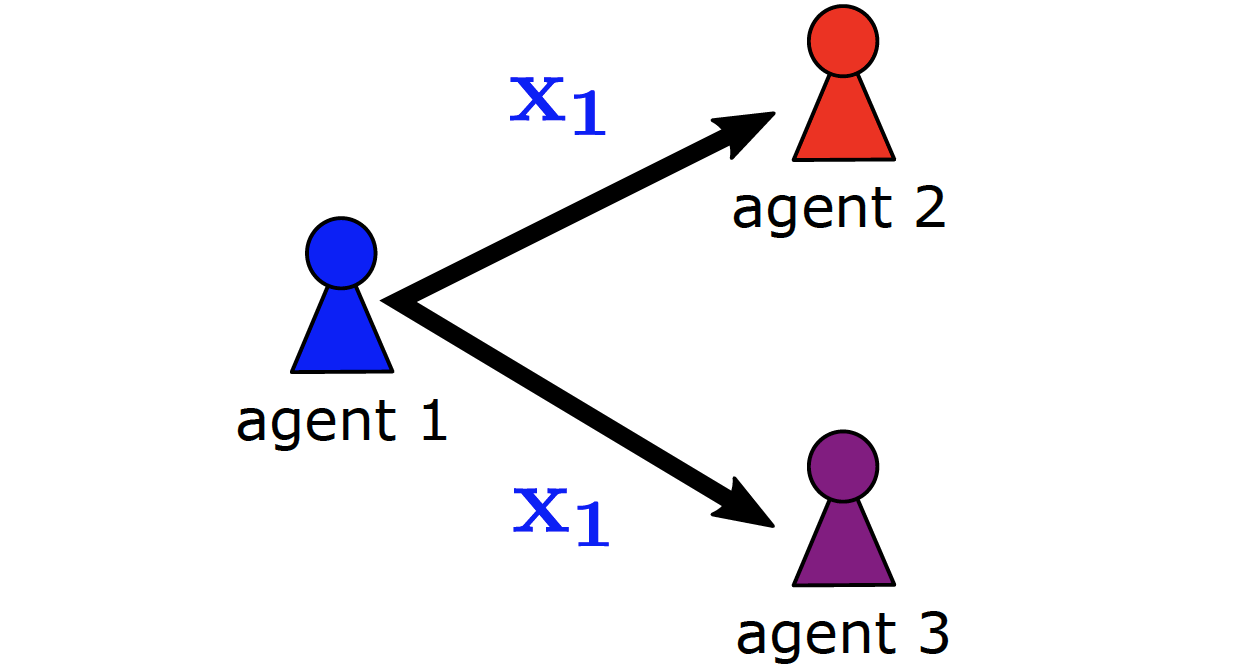

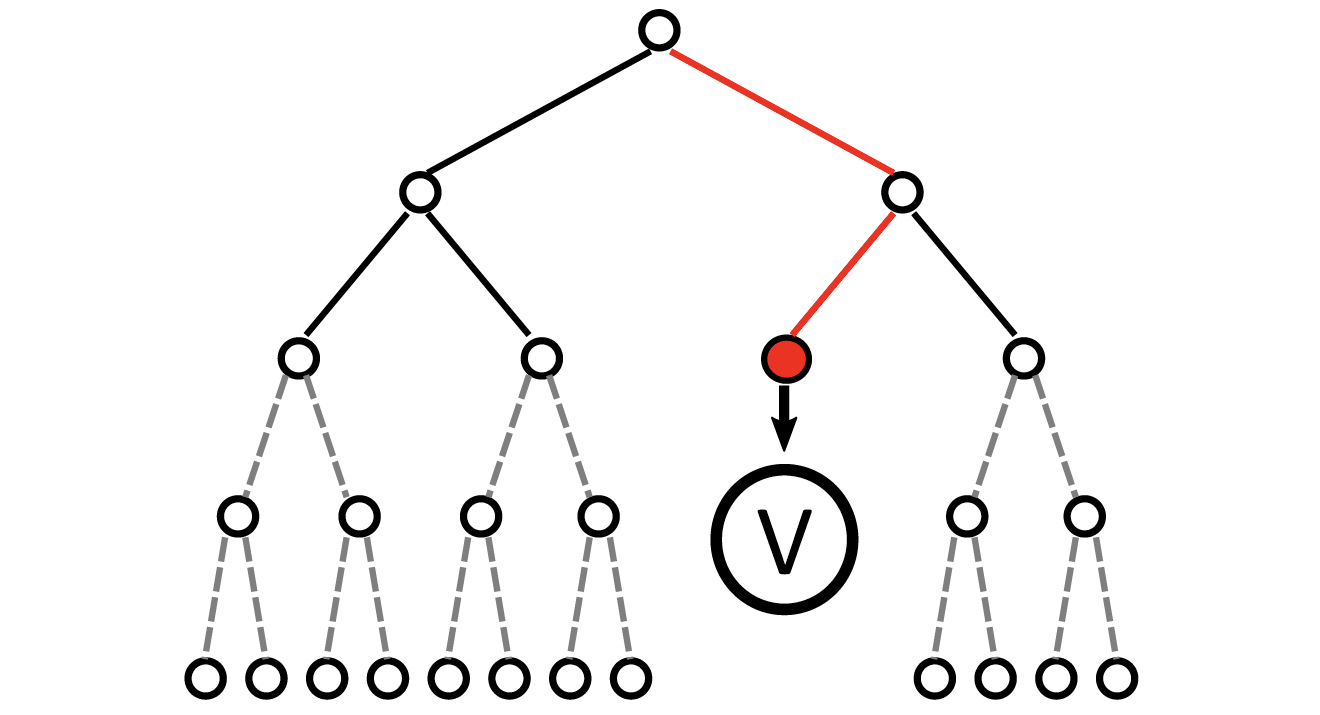

Multi-Agent Path Finding (MAPF) is the challenging problem of finding collision-free paths for multiple agents, which has a wide range of applications, such as automated warehouses, smart manufacturing, and traffic management. Recently, machine learning-based approaches have become popular in addressing MAPF problems in a decentralized and potentially generalizing way. Most learning-based MAPF approaches use reinforcement and imitation learning to train agent policies for decentralized execution under partial observability. However, current state-of-the-art approaches suffer from a prevalent bias toward micro-aspects of particular MAPF problems, such as congestion in corridors and potential delays caused by single agents, leading to tight specializations through extensive engineering via oversized models, reward shaping, path finding algorithms, and communication. These specializations are generally detrimental to sample efficiency, i.e., the learning progress given a certain amount of experience, and to generalization to previously unseen scenarios. In contrast, curriculum learning offers an elegant and much simpler way of training agent policies in a step-by-step manner to master all aspects implicitly without extensive engineering. In this paper, we propose a generative curriculum approach to learning-based MAPF using Variational Autoencoder Utilized Learning of Terrains (VAULT). We introduce a two-stage framework to (I) train the VAULT via unsupervised learning to obtain a latent space representation of maps and (II) use the VAULT to generate curricula in order to improve sample efficiency and generalization of learning-based MAPF methods. For the second stage, we propose a bi-level curriculum scheme by combining our VAULT curriculum with a low-level curriculum method to improve sample efficiency further. Our framework is designed in a modular and general way, where each proposed component serves its purpose in a black-box manner without considering specific micro-aspects of the underlying problem. We empirically evaluate our approach on maps of the public MAPF benchmark set as well as novel artificial maps generated with the VAULT. Our results demonstrate the effectiveness of the VAULT as a map generator and our VAULT curriculum in improving sample efficiency and generalization of learning-based MAPF methods compared to alternative approaches. We also demonstrate how data pruning can further reduce the dependence on available maps without affecting the generalization potential of our approach.

@article{ phanJAIR25,

author = "Thomy Phan and Timy Phan and Sven Koenig",

title = "Generative Curricula for Multi-Agent Path Finding via Unsupervised and Reinforcement Learning",

year = "2025",

abstract = "Multi-Agent Path Finding (MAPF) is the challenging problem of finding collision-free paths for multiple agents, which has a wide range of applications, such as automated warehouses, smart manufacturing, and traffic management. Recently, machine learning-based approaches have become popular in addressing MAPF problems in a decentralized and potentially generalizing way. Most learning-based MAPF approaches use reinforcement and imitation learning to train agent policies for decentralized execution under partial observability. However, current state-of-the-art approaches suffer from a prevalent bias toward micro-aspects of particular MAPF problems, such as congestion in corridors and potential delays caused by single agents, leading to tight specializations through extensive engineering via oversized models, reward shaping, path finding algorithms, and communication. These specializations are generally detrimental to sample efficiency, i.e., the learning progress given a certain amount of experience, and to generalization to previously unseen scenarios. In contrast, curriculum learning offers an elegant and much simpler way of training agent policies in a step-by-step manner to master all aspects implicitly without extensive engineering. In this paper, we propose a generative curriculum approach to learning-based MAPF using Variational Autoencoder Utilized Learning of Terrains (VAULT). We introduce a two-stage framework to (I) train the VAULT via unsupervised learning to obtain a latent space representation of maps and (II) use the VAULT to generate curricula in order to improve sample efficiency and generalization of learning-based MAPF methods. For the second stage, we propose a bi-level curriculum scheme by combining our VAULT curriculum with a low-level curriculum method to improve sample efficiency further. Our framework is designed in a modular and general way, where each proposed component serves its purpose in a black-box manner without considering specific micro-aspects of the underlying problem. We empirically evaluate our approach on maps of the public MAPF benchmark set as well as novel artificial maps generated with the VAULT. Our results demonstrate the effectiveness of the VAULT as a map generator and our VAULT curriculum in improving sample efficiency and generalization of learning-based MAPF methods compared to alternative approaches. We also demonstrate how data pruning can further reduce the dependence on available maps without affecting the generalization potential of our approach.",

url = "https://thomyphan.github.io/files/2025-jair.pdf",

eprint = "https://thomyphan.github.io/files/2025-jair.pdf",

journal = "Journal of Artificial Intelligence Research",

pages = "2471--2534",

doi = "https://doi.org/10.1613/jair.1.17403"

}

Related Articles

- T. Phan et al., “Truncated Counterfactual Learning for Anytime Multi-Agent Path Finding”, AAAI 2026

- T. Phan et al., “Anytime Multi-Agent Path Finding with an Adaptive Delay-Based Heuristic”, AAAI 2025

- T. Phan et al., “Confidence-Based Curriculum Learning for Multi-Agent Path Finding”, AAMAS 2024

- S. Chan et al., “Anytime Multi-Agent Path Finding using Operator Parallelism in Large Neighborhood Search”, AAMAS 2024

- T. Phan et al., “Adaptive Anytime Multi-Agent Path Finding using Bandit-Based Large Neighborhood Search”, AAAI 2024

- P. Altmann et al., “CROP: Towards Distributional-Shift Robust Reinforcement Learning using Compact Reshaped Observation Processing”, IJCAI 2023

- T. Phan et al., “Attention-Based Recurrence for Multi-Agent Reinforcement Learning under Stochastic Partial Observability”, ICML 2023

- T. Phan et al., “VAST: Value Function Factorization with Variable Agent Sub-Teams”, NeurIPS 2021

- T. Gabor et al., “The Scenario Coevolution Paradigm: Adaptive Quality Assurance for Adaptive Systems”, STTT 2020

- T. Phan et al., “A Distributed Policy Iteration Scheme for Cooperative Multi-Agent Policy Approximation”, ALA 2020

- T. Gabor et al., “Scenario Co-Evolution for Reinforcement Learning on a Grid World Smart Factory Domain”, GECCO 2019

- T. Phan et al., “Distributed Policy Iteration for Scalable Approximation of Cooperative Multi-Agent Policies”, AAMAS 2019

- T. Phan et al., “Leveraging Statistical Multi-Agent Online Planning with Emergent Value Function Approximation”, AAMAS 2018

Relevant Research Areas