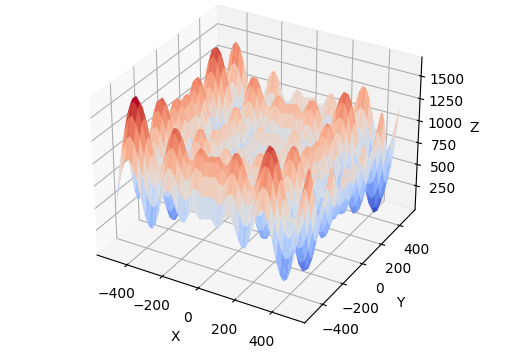

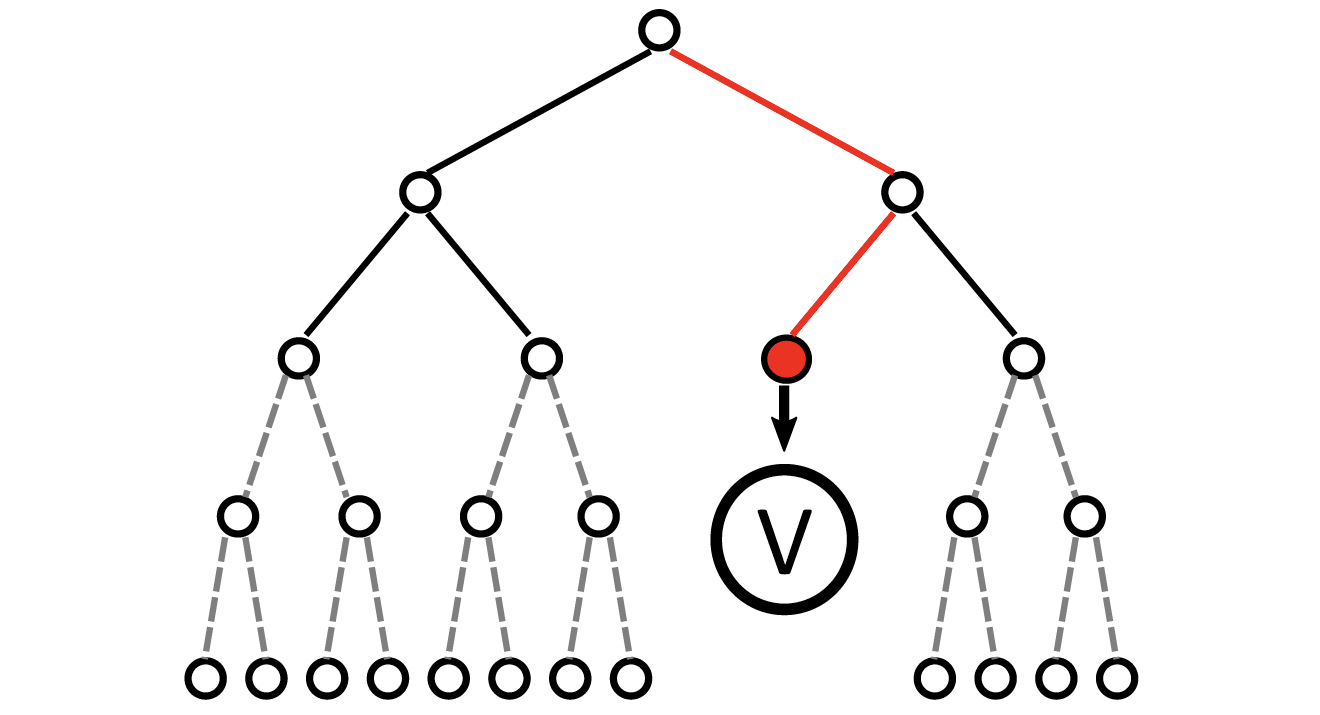

Anytime multi-agent path finding (MAPF) is a promising approach to scalable path optimization in large-scale multi-agent systems. State-of-the-art anytime MAPF is based on Large Neighborhood Search (LNS), where a fast initial solution is iteratively optimized by destroying and repairing a fixed number of parts of the solution, i.e., the neighborhood, using randomized destroy heuristics and prioritized planning. Despite their recent success in various MAPF instances, current LNS-based approaches lack exploration and flexibility due to greedy optimization with a fixed neighborhood size, which can lead to low-quality solutions in general. So far, these limitations have been addressed with extensive prior effort in tuning or offline machine learning beyond actual planning. In this paper, we focus on online learning in LNS and propose Bandit-based Adaptive LArge Neighborhood search Combined with Exploration (BALANCE). BALANCE uses a bi-level multi-armed bandit scheme to adapt the selection of destroy heuristics and neighborhood sizes on the fly during search. We evaluate BALANCE on multiple maps from the MAPF benchmark set and empirically demonstrate cost improvements of at least 50% compared to state-of-the-art anytime MAPF in large-scale scenarios. We find that Thompson Sampling performs particularly well compared to alternative multi-armed bandit algorithms.
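The abstract describes the bi-level bandit only at a high level. Below is a minimal sketch, not the authors' implementation, of how Thompson Sampling could pick a destroy heuristic (level 1) and then a neighborhood size (level 2) in each LNS iteration. Several details are assumptions: the reward is binary (1 if destroy and repair lowered the solution cost), each arm uses a Beta-Bernoulli posterior, every heuristic keeps its own size bandit, and the heuristic labels, the size set, and the toy `p_improve` function are hypothetical stand-ins for real destroy heuristics and prioritized-planning repair.

```python
import random


class BetaArm:
    """Beta-Bernoulli posterior for one arm; reward 1 means the repair lowered the cost (assumed)."""

    def __init__(self):
        self.alpha = 1.0  # 1 + successes, i.e. a uniform Beta(1, 1) prior
        self.beta = 1.0   # 1 + failures

    def sample(self, rng):
        # Thompson Sampling: draw a plausible success rate from the posterior
        return rng.betavariate(self.alpha, self.beta)

    def update(self, improved):
        if improved:
            self.alpha += 1.0
        else:
            self.beta += 1.0


class BiLevelBandit:
    """Level 1 picks a destroy heuristic; level 2 picks a neighborhood size for it."""

    def __init__(self, heuristics, sizes, seed=0):
        self.rng = random.Random(seed)
        self.heuristic_arms = {h: BetaArm() for h in heuristics}
        # Assumption: each heuristic keeps its own size bandit
        self.size_arms = {h: {n: BetaArm() for n in sizes} for h in heuristics}

    def _thompson(self, arms):
        # Pick the arm whose posterior sample is largest
        return max(arms, key=lambda k: arms[k].sample(self.rng))

    def select(self):
        h = self._thompson(self.heuristic_arms)
        n = self._thompson(self.size_arms[h])
        return h, n

    def update(self, h, n, improved):
        # The same outcome updates both levels
        self.heuristic_arms[h].update(improved)
        self.size_arms[h][n].update(improved)


def toy_lns(iterations=2000, seed=1):
    """Toy driver: a real LNS step would destroy n agents' paths and replan them."""
    heuristics = ["random", "agent", "map"]  # hypothetical labels
    sizes = [2, 4, 8, 16, 32]                 # hypothetical size set
    bandit = BiLevelBandit(heuristics, sizes, seed=seed)
    env_rng = random.Random(seed + 1)

    def p_improve(h, n):
        # Hypothetical stand-in for destroy + repair: chance the cost drops
        return 0.6 if (h, n) == ("map", 8) else 0.1

    counts = {}
    for _ in range(iterations):
        h, n = bandit.select()
        improved = env_rng.random() < p_improve(h, n)
        bandit.update(h, n, improved)
        counts[(h, n)] = counts.get((h, n), 0) + 1
    return counts


if __name__ == "__main__":
    counts = toy_lns()
    print(max(counts, key=counts.get))
```

Running the script prints the most frequently selected (heuristic, size) pair. Under these toy probabilities the bandit should concentrate on the single high-reward pair while still occasionally sampling the others, which illustrates adapting both choices online instead of fixing the neighborhood size in advance.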

@article{phanAAAI24,
  author = "Thomy Phan and Taoan Huang and Bistra Dilkina and Sven Koenig",
  title = "Adaptive Anytime Multi-Agent Path Finding Using Bandit-Based Large Neighborhood Search",
  year = "2024",
  abstract = "Anytime multi-agent path finding (MAPF) is a promising approach to scalable path optimization in large-scale multi-agent systems. State-of-the-art anytime MAPF is based on Large Neighborhood Search (LNS), where a fast initial solution is iteratively optimized by destroying and repairing a fixed number of parts, i.e., the neighborhood, of the solution, using randomized destroy heuristics and prioritized planning. Despite their recent success in various MAPF instances, current LNS-based approaches lack exploration and flexibility due to greedy optimization with a fixed neighborhood size which can lead to low quality solutions in general. So far, these limitations have been addressed with extensive prior effort in tuning or offline machine learning beyond actual planning. In this paper, we focus on online learning in LNS and propose Bandit-based Adaptive LArge Neighborhood search Combined with Exploration (BALANCE). BALANCE uses a bi-level multi-armed bandit scheme to adapt the selection of destroy heuristics and neighborhood sizes on the fly during search. We evaluate BALANCE on multiple maps from the MAPF benchmark set and empirically demonstrate cost improvements of at least 50% compared to state-of-the-art anytime MAPF in large-scale scenarios. We find that Thompson Sampling performs particularly well compared to alternative multi-armed bandit algorithms.",
  url = "https://thomyphan.github.io/files/2024-aaai-preprint.pdf",
  eprint = "https://arxiv.org/pdf/2312.16767.pdf",
  journal = "Proceedings of the AAAI Conference on Artificial Intelligence",
  pages = "17514--17522",
  doi = "10.1609/aaai.v38i16.29701"
}
