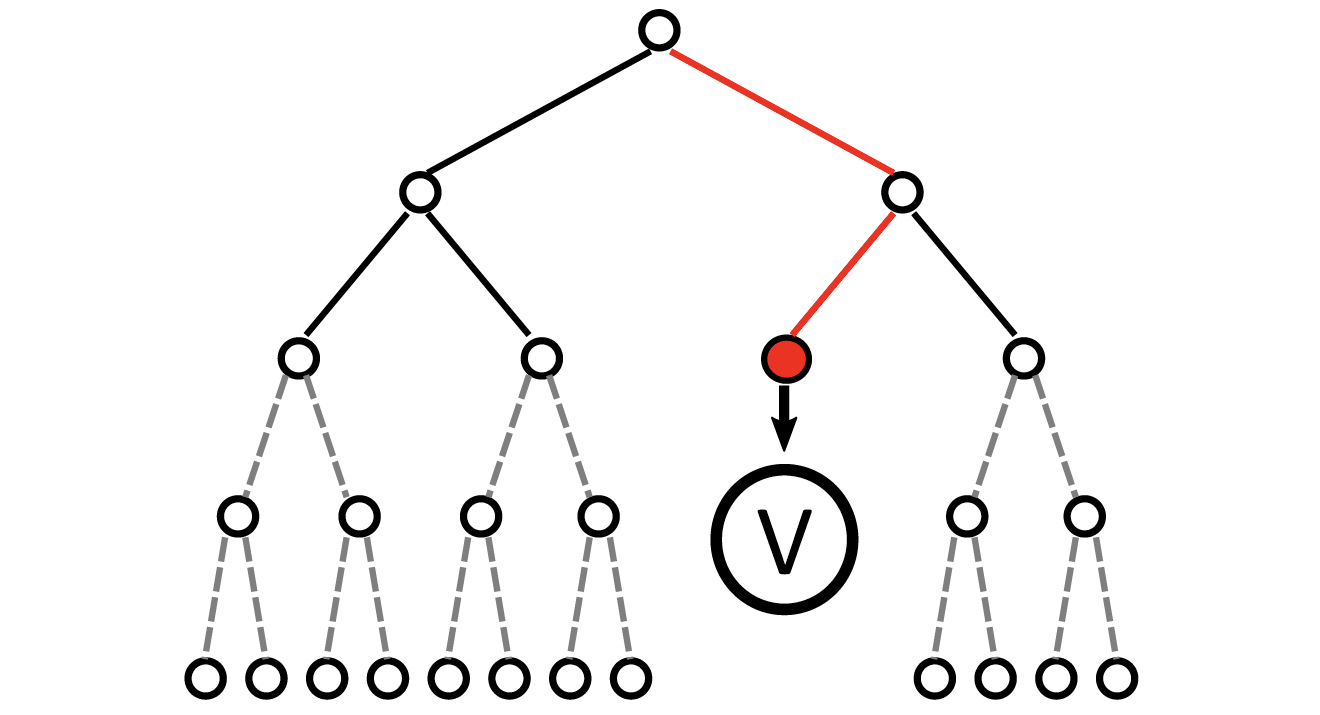

Monte-Carlo Tree Search (MCTS) is a popular approach to online planning under uncertainty. While MCTS uses statistical sampling via multi-armed bandits to avoid exhaustive search in complex domains, common closed-loop approaches typically construct enormous search trees to consider a large number of potential observations and actions. On the other hand, open-loop approaches offer better memory efficiency by ignoring observations but are generally not competitive with closed-loop MCTS in terms of performance -- even with commonly integrated human knowledge. In this paper, we propose Counterfactual Open-loop Reasoning with Ad hoc Learning (CORAL) for open-loop MCTS, using a causal multi-armed bandit approach with unobserved confounders (MABUC). CORAL consists of two online learning phases that are conducted during the open-loop search. In the first phase, an intent policy is learned based on preferred actions. In the second phase, a counterfactual policy is learned with MABUCs to make a final decision using the previously learned intent policy. We evaluate CORAL in four POMDP benchmark scenarios and compare it with closed-loop and open-loop alternatives. In contrast to standard open-loop MCTS, CORAL achieves competitive performance compared with closed-loop algorithms while constructing significantly smaller search trees.
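The two learning phases described above can be illustrated with a small bandit sketch. This is not the authors' CORAL implementation and it involves no search tree. It is a minimal toy, assuming Beta-Bernoulli Thompson sampling for both phases and a made-up payoff function in which the intent merely stands in for information about an unobserved confounder. The names `BetaArm`, `TwoPhaseBandit`, `toy_reward`, and `run_demo` are all hypothetical.

```python
import random


class BetaArm:
    """Beta posterior over the mean reward of one arm (rewards in [0, 1])."""

    def __init__(self):
        self.wins = 1.0    # uniform Beta(1, 1) prior
        self.losses = 1.0

    def sample(self, rng):
        return rng.betavariate(self.wins, self.losses)

    def mean(self):
        return self.wins / (self.wins + self.losses)

    def update(self, reward):
        self.wins += reward
        self.losses += 1.0 - reward


def thompson_pick(arms, rng):
    """Thompson sampling: draw from each posterior, play the best draw."""
    draws = [arm.sample(rng) for arm in arms]
    return max(range(len(arms)), key=draws.__getitem__)


class TwoPhaseBandit:
    """Hypothetical two-phase bandit: intent policy, then intent-conditioned policy."""

    def __init__(self, n_actions, seed=0):
        self.rng = random.Random(seed)
        self.intent_arms = [BetaArm() for _ in range(n_actions)]
        # counterfactual_arms[i][a]: reward estimate for playing a when the intent was i.
        self.counterfactual_arms = [
            [BetaArm() for _ in range(n_actions)] for _ in range(n_actions)
        ]

    def intend(self):
        return thompson_pick(self.intent_arms, self.rng)

    def decide(self, intent):
        return thompson_pick(self.counterfactual_arms[intent], self.rng)

    def greedy(self, intent):
        arms = self.counterfactual_arms[intent]
        return max(range(len(arms)), key=lambda a: arms[a].mean())


def toy_reward(intent, action, n_actions):
    """Made-up payoff (assumption): the action right after the intended one pays."""
    return 1.0 if action == (intent + 1) % n_actions else 0.0


def run_demo(n_actions=3, phase1_rounds=200, phase2_rounds=600, seed=0):
    bandit = TwoPhaseBandit(n_actions, seed)
    # Phase 1: learn the intent policy by playing the intended action directly.
    for _ in range(phase1_rounds):
        intent = bandit.intend()
        bandit.intent_arms[intent].update(toy_reward(intent, intent, n_actions))
    # Phase 2: keep the intent policy fixed; learn which action to play given the intent.
    for _ in range(phase2_rounds):
        intent = bandit.intend()
        action = bandit.decide(intent)
        reward = toy_reward(intent, action, n_actions)
        bandit.counterfactual_arms[intent][action].update(reward)
    # Greedy final decision per intent, by posterior mean.
    return [bandit.greedy(i) for i in range(n_actions)]
```

Calling `run_demo()` returns one greedy action per intent. In this toy, following the intent directly never pays off, so the intent-conditioned policy learns to override it. The point of the sketch is only that the second-phase decision is conditioned on the output of the first-phase policy.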

@article{ phan2AAAI25,

author = "Thomy Phan and Shao-Hung Chan and Sven Koenig",

title = "Counterfactual Online Learning for Open-Loop Monte-Carlo Planning",

year = "2025",

abstract = "Monte-Carlo Tree Search (MCTS) is a popular approach to online planning under uncertainty. While MCTS uses statistical sampling via multi-armed bandits to avoid exhaustive search in complex domains, common closed-loop approaches typically construct enormous search trees to consider a large number of potential observations and actions. On the other hand, open-loop approaches offer better memory efficiency by ignoring observations but are generally not competitive with closed-loop MCTS in terms of performance -- even with commonly integrated human knowledge. In this paper, we propose Counterfactual Open-loop Reasoning with Ad hoc Learning (CORAL) for open-loop MCTS, using a causal multi-armed bandit approach with unobserved confounders (MABUC). CORAL consists of two online learning phases that are conducted during the open-loop search. In the first phase, an intent policy is learned based on preferred actions. In the second phase, a counterfactual policy is learned with MABUCs to make a final decision using the previously learned intent policy. We evaluate CORAL in four POMDP benchmark scenarios and compare it with closed-loop and open-loop alternatives. In contrast to standard open-loop MCTS, CORAL achieves competitive performance compared with closed-loop algorithms while constructing significantly smaller search trees.",

url = "https://thomyphan.github.io/files/2025-aaai-preprint2.pdf",

eprint = "https://thomyphan.github.io/files/2025-aaai-preprint2.pdf",

location = "Philadelphia, PA, USA",

journal = "Proceedings of the AAAI Conference on Artificial Intelligence",

pages = "26651--26658",

doi = "10.1609/aaai.v39i25.34867"

}

Related Articles

- T. Phan et al., “Truncated Counterfactual Learning for Anytime Multi-Agent Path Finding”, AAAI 2026

- T. Phan et al., “Anytime Multi-Agent Path Finding with an Adaptive Delay-Based Heuristic”, AAAI 2025

- T. Phan et al., “Adaptive Anytime Multi-Agent Path Finding using Bandit-Based Large Neighborhood Search”, AAAI 2024

- T. Phan et al., “Adaptive Thompson Sampling Stacks for Memory Bounded Open-Loop Planning”, IJCAI 2019

- T. Phan et al., “Memory Bounded Open-Loop Planning in Large POMDPs Using Thompson Sampling”, AAAI 2019

- T. Gabor et al., “Subgoal-Based Temporal Abstraction in Monte-Carlo Tree Search”, IJCAI 2019

Relevant Research Areas