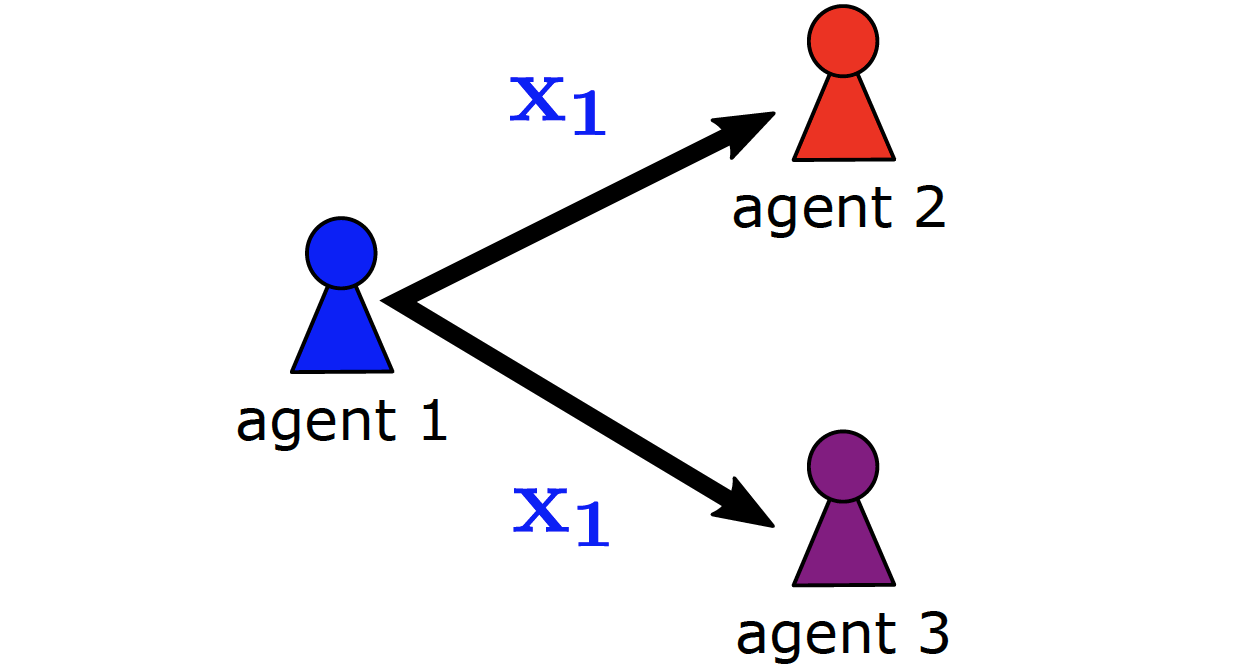

Stochastic partial observability poses a major challenge for decentralized coordination in multi-agent reinforcement learning but is largely neglected in state-of-the-art research due to a strong focus on state-based centralized training for decentralized execution (CTDE) and benchmarks that lack sufficient stochasticity like StarCraft Multi-Agent Challenge (SMAC). In this paper, we propose Attention-based Embeddings of Recurrence In multi-Agent Learning (AERIAL) to approximate value functions under stochastic partial observability. AERIAL replaces the true state with a learned representation of multi-agent recurrence, considering more accurate information about decentralized agent decisions than state-based CTDE. We then introduce MessySMAC, a modified version of SMAC with stochastic observations and higher variance in initial states, to provide a more general and configurable benchmark regarding stochastic partial observability. We evaluate AERIAL in Dec-Tiger as well as in a variety of SMAC and MessySMAC maps, and compare the results with state-based CTDE. Furthermore, we evaluate the robustness of AERIAL and state-based CTDE against various stochasticity configurations in MessySMAC.
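To make the central idea concrete, the following is a minimal sketch of self-attention applied to the recurrent hidden states of several agents, with the result standing in for the true state as input to a centralized value function. It is not the authors' implementation: the function names, the single attention head, the plain-list weight matrices `w_q`, `w_k`, `w_v`, and the final concatenation are all illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def attend_over_hidden_states(hidden_states, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention across agents.

    hidden_states: one recurrent hidden-state vector per agent
    (hypothetical input; in practice these would come from each
    agent's recurrent network).
    """
    queries = [matvec(w_q, h) for h in hidden_states]
    keys = [matvec(w_k, h) for h in hidden_states]
    values = [matvec(w_v, h) for h in hidden_states]
    scale = math.sqrt(len(keys[0]))

    outputs = []
    for q in queries:
        # Similarity of this agent's query to every agent's key.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        dim = len(values[0])
        outputs.append(
            [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
        )
    return outputs


def joint_embedding(hidden_states, w_q, w_k, w_v):
    """Concatenate the attended per-agent vectors into one embedding.

    Assumption for illustration: this vector is what a centralized
    value function would receive instead of the true state.
    """
    attended = attend_over_hidden_states(hidden_states, w_q, w_k, w_v)
    return [x for out in attended for x in out]


# Three agents with two-dimensional hidden states and identity projections.
identity = [[1.0, 0.0], [0.0, 1.0]]
agent_hidden_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
embedding = joint_embedding(agent_hidden_states, identity, identity, identity)
```

Because the embedding is computed from the agents' own hidden states, it only contains information the agents actually hold at decision time, which is the motivation the abstract gives for replacing the true state.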

@inproceedings{phanICML23,

author = "Thomy Phan and Fabian Ritz and Philipp Altmann and Maximilian Zorn and Jonas Nüßlein and Michael Kölle and Thomas Gabor and Claudia Linnhoff-Popien",

title = "Attention-Based Recurrence for Multi-Agent Reinforcement Learning under Stochastic Partial Observability",

year = "2023",

abstract = "Stochastic partial observability poses a major challenge for decentralized coordination in multi-agent reinforcement learning but is largely neglected in state-of-the-art research due to a strong focus on state-based centralized training for decentralized execution (CTDE) and benchmarks that lack sufficient stochasticity like StarCraft Multi-Agent Challenge (SMAC). In this paper, we propose Attention-based Embeddings of Recurrence In multi-Agent Learning (AERIAL) to approximate value functions under stochastic partial observability. AERIAL replaces the true state with a learned representation of multi-agent recurrence, considering more accurate information about decentralized agent decisions than state-based CTDE. We then introduce MessySMAC, a modified version of SMAC with stochastic observations and higher variance in initial states, to provide a more general and configurable benchmark regarding stochastic partial observability. We evaluate AERIAL in Dec-Tiger as well as in a variety of SMAC and MessySMAC maps, and compare the results with state-based CTDE. Furthermore, we evaluate the robustness of AERIAL and state-based CTDE against various stochasticity configurations in MessySMAC.",

url = "https://proceedings.mlr.press/v202/phan23a.html",

eprint = "https://thomyphan.github.io/files/2023-icml-preprint.pdf",

location = "Hawaii, USA",

publisher = "PMLR",

booktitle = "Proceedings of the 40th International Conference on Machine Learning",

pages = "27840--27853",

keywords = "Dec-POMDP, stochastic partial observability, multi-agent learning, recurrence, self-attention",

}

Related Articles

- T. Phan et al., “Spatially Grouped Curriculum Learning for Multi-Agent Path Finding”, AAAI 2026

- T. Phan et al., “Generative Curricula for Multi-Agent Path Finding via Unsupervised and Reinforcement Learning”, JAIR 2025

- T. Phan et al., “Confidence-Based Curriculum Learning for Multi-Agent Path Finding”, AAMAS 2024

- P. Altmann et al., “Challenges for Reinforcement Learning in Quantum Computing”, QCE 2024

- T. Phan et al., “Attention-Based Recurrence for Multi-Agent Reinforcement Learning under State Uncertainty”, AAMAS 2023 (short version)

- T. Phan et al., “VAST: Value Function Factorization with Variable Agent Sub-Teams”, NeurIPS 2021

- T. Phan, “Emergence and Resilience in Multi-Agent Reinforcement Learning”, PhD Thesis

Relevant Research Areas