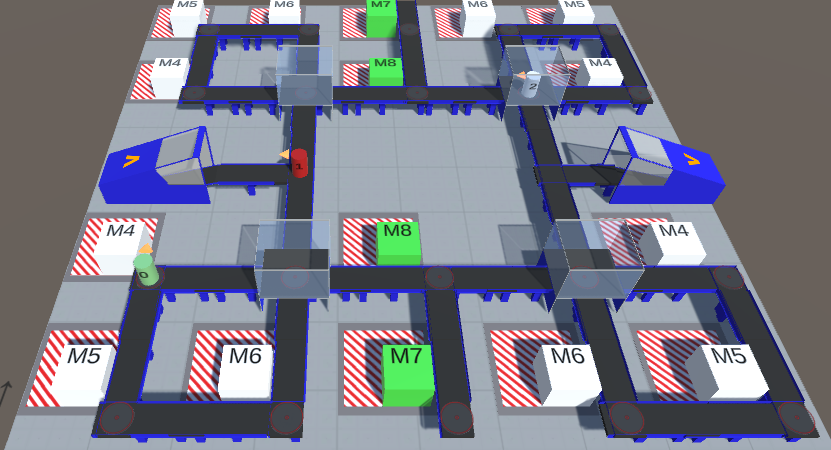

Our world represents an enormous multi-agent system (MAS), consisting of a plethora of agents that make decisions under uncertainty to achieve certain goals. The interaction of agents constantly affects our world in various ways, leading to the emergence of interesting phenomena like life forms and civilizations that can last for many years while withstanding various kinds of disturbances. Building artificial MAS that are able to adapt and survive similarly to natural MAS is a major goal in artificial intelligence, as a wide range of potential real-world applications like autonomous driving, multi-robot warehouses, and cyber-physical production systems can be straightforwardly modeled as MAS. Multi-agent reinforcement learning (MARL) is a promising approach to building such systems and has achieved remarkable progress in recent years. However, state-of-the-art MARL commonly assumes very idealized conditions to optimize performance in best-case scenarios while neglecting further aspects that are relevant to the real world. In this thesis, we address emergence and resilience in MARL, which are important aspects of building artificial MAS that adapt and survive as effectively as natural MAS do. We first focus on emergent cooperation from local interaction of self-interested agents and introduce a peer incentivization approach based on mutual acknowledgments. We then propose to exploit emergent phenomena to further improve coordination in large cooperative MAS via decentralized planning or hierarchical value function factorization. To maintain multi-agent coordination in the presence of partial changes, as in classic distributed systems, we present adversarial methods to improve and evaluate resilience in MARL. Finally, we briefly cover a selection of further topics that are relevant to advancing MARL towards real-world applicability.

    @phdthesis{phanPhD23,
      author = "Thomy Phan",
      title = "Emergence and Resilience in Multi-Agent Reinforcement Learning",
      year = "2023",
      school = "LMU Munich",
      type = "dissertation",
    }

Featured Articles

- T. Phan et al., “Attention-Based Recurrence for Multi-Agent Reinforcement Learning under Stochastic Partial Observability”, ICML 2023

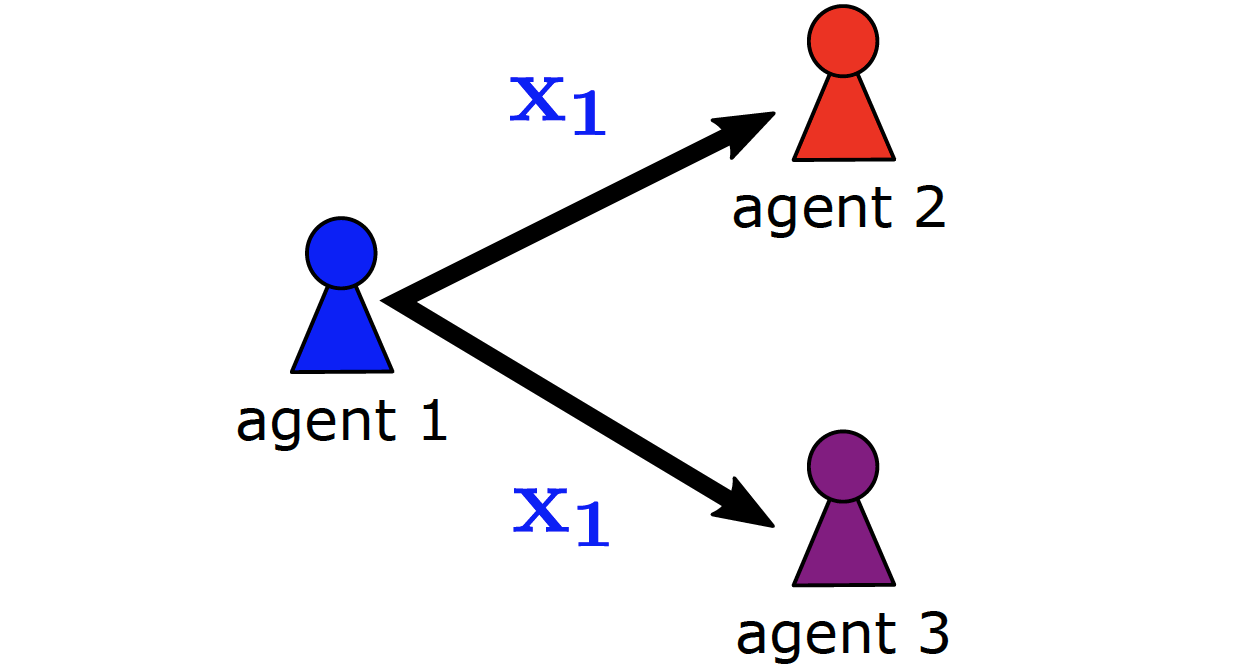

- T. Phan et al., “Emergent Cooperation from Mutual Acknowledgment Exchange”, AAMAS 2022

- T. Phan et al., “VAST: Value Function Factorization with Variable Agent Sub-Teams”, NeurIPS 2021

- T. Phan et al., “Resilient Multi-Agent Reinforcement Learning with Adversarial Value Decomposition”, AAAI 2021

- T. Phan et al., “Learning and Testing Resilience in Cooperative Multi-Agent Systems”, AAMAS 2020

- T. Phan et al., “A Distributed Policy Iteration Scheme for Cooperative Multi-Agent Policy Approximation”, ALA 2020

- T. Phan et al., “Adaptive Thompson Sampling Stacks for Memory Bounded Open-Loop Planning”, IJCAI 2019

- T. Phan et al., “Distributed Policy Iteration for Scalable Approximation of Cooperative Multi-Agent Policies”, AAMAS 2019

- T. Phan et al., “Memory Bounded Open-Loop Planning in Large POMDPs Using Thompson Sampling”, AAAI 2019

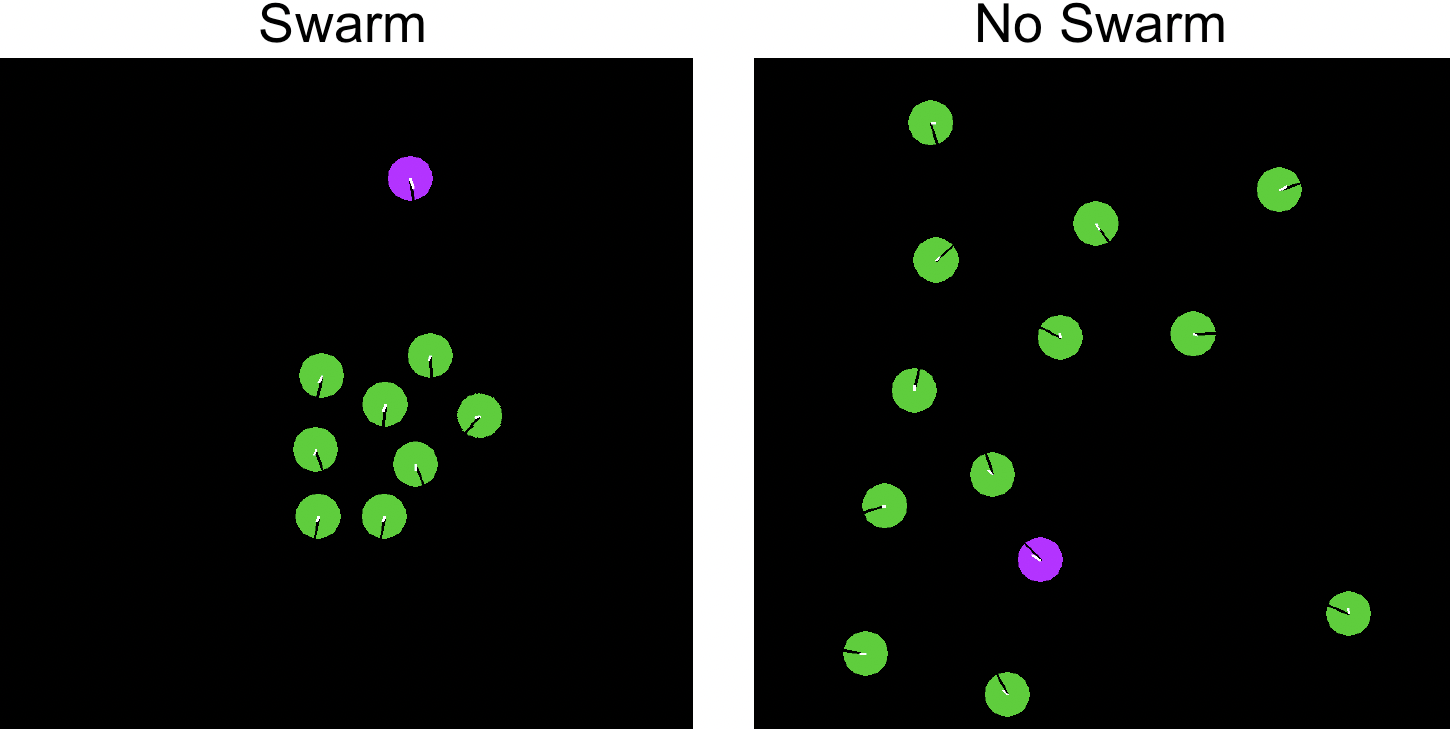

- C. Hahn et al., “Emergent Escape-based Flocking Behavior using Multi-Agent Reinforcement Learning”, ALIFE 2019

Relevant Research Areas