Adaptive Bi-Nonlinear Neural Networks Based on Complex Numbers with Weights Constrained along the Unit Circle

Felip Guimerà Cuevas, Thomy Phan, and Helmut Schmid. The 27th Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD), pages 355–366, 2023.


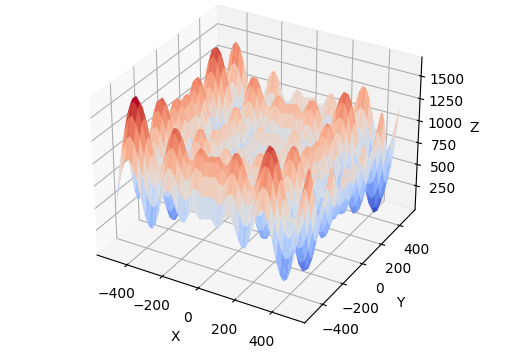

Traditional real-valued neural networks can suppress neural inputs by setting the weights to zero or overshadow other inputs by using extreme weight values. Large network weights are undesirable because they may cause network instability and lead to exploding gradients. To penalize such large weights, adequate regularization is typically required. This work presents a feed-forward and convolutional layer architecture that constrains weights along the unit circle such that neural connections can never be eliminated or suppressed by weights, ensuring that no incoming information is lost by dying neurons. The neural network's decision boundaries are redefined by expressing model weights as angles of phase rotations and layer inputs as amplitude modulations, with trainable weights always remaining within a fixed range. The approach can be quickly and readily integrated into existing layers while preserving the model architecture of the original network. The classification performance was tested and assessed on basic computer vision data sets using ShuffleNetv2, ResNet18, and GoogLeNet at high learning rates.
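The core idea in the abstract, weights that act only as phase rotations on the unit circle while inputs set the amplitude, can be illustrated with a small sketch. This is not the authors' implementation: the function names `unit_circle_neuron` and `wrap_angle`, the use of real-valued inputs, and the plain complex sum are all assumptions made for illustration, and the nonlinearities used in the paper are not reproduced here.

```python
import cmath
import math


def wrap_angle(theta):
    """Map an angle to [-pi, pi) so the trainable parameter stays in a fixed range.

    Hypothetical helper: the paper only states that weights remain in a fixed range.
    """
    return (theta + math.pi) % (2 * math.pi) - math.pi


def unit_circle_neuron(inputs, angles):
    """Sum real inputs after rotating each by a unit-modulus weight e^{i*theta}.

    Each input x_j acts as an amplitude and each weight angle theta_j as a phase
    rotation. Because |e^{i*theta}| is always 1, a weight can neither zero out
    an input nor scale it up (assumed reading of the abstract).
    """
    if len(inputs) != len(angles):
        raise ValueError("inputs and angles must have the same length")
    return sum(x * cmath.exp(1j * wrap_angle(t)) for x, t in zip(inputs, angles))


if __name__ == "__main__":
    x = [0.5, 2.0, 1.0]
    theta = [0.0, math.pi / 2, 2.5 * math.pi]
    z = unit_circle_neuron(x, theta)
    # The pre-activation is complex; its magnitude and phase depend on how
    # the rotated inputs interfere with each other.
    print("pre-activation:", z)
    print("magnitude:", abs(z), "phase:", cmath.phase(z))
```

In this sketch, inputs cancel only through interference between different phases (for example, two equal inputs rotated by 0 and π), never because a single weight shrinks toward zero.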

@inproceedings{cuevasPAKDD23,
  author = "Felip Guimerà Cuevas and Thomy Phan and Helmut Schmid",
  title = "Adaptive Bi-Nonlinear Neural Networks Based on Complex Numbers with Weights Constrained along the Unit Circle",
  year = "2023",
  abstract = "Traditional real-valued neural networks can suppress neural inputs by setting the weights to zero or overshadow other inputs by using extreme weight values. Large network weights are undesirable because they may cause network instability and lead to exploding gradients. To penalize such large weights, adequate regularization is typically required. This work presents a feed-forward and convolutional layer architecture that constrains weights along the unit circle such that neural connections can never be eliminated or suppressed by weights, ensuring that no incoming information is lost by dying neurons. The neural network's decision boundaries are redefined by expressing model weights as angles of phase rotations and layer inputs as amplitude modulations, with trainable weights always remaining within a fixed range. The approach can be quickly and readily integrated into existing layers while preserving the model architecture of the original network. The classification performance was tested and assessed on basic computer vision data sets using ShuffleNetv2, ResNet18, and GoogLeNet at high learning rates.",
  url = "https://link.springer.com/chapter/10.1007/978-3-031-33374-3_28",
  eprint = "https://link.springer.com/chapter/10.1007/978-3-031-33374-3_28",
  location = "Osaka, Japan",
  publisher = "Springer Nature Switzerland",
  booktitle = "The 27th Pacific-Asia Conference on Knowledge Discovery and Data Mining",
  pages = "355--366",
  keywords = "deep learning",
  doi = "10.1007/978-3-031-33374-3_28"
}

Related Articles

- Zorn et al., “Social Neural Network Soups with Surprise Minimization”, ALIFE 2023