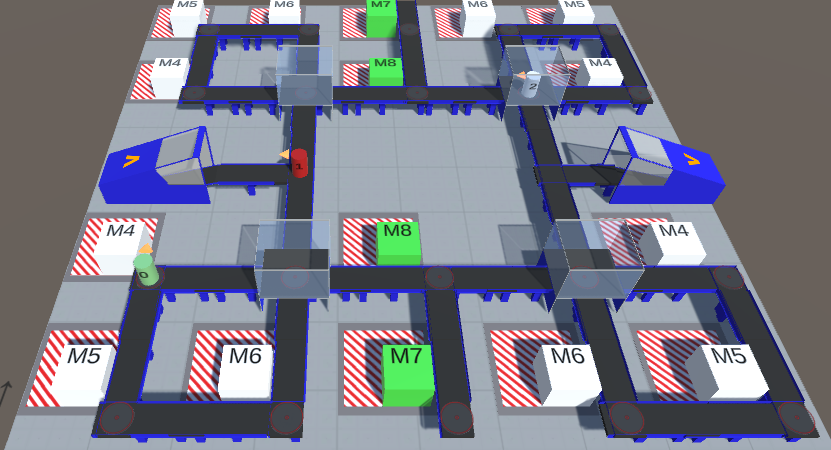

Systems are becoming increasingly more adaptive, using techniques like machine learning to enhance their behavior on their own rather than only through human developers programming them. We analyze the impact the advent of these new techniques has on the discipline of rigorous software engineering, especially on the issue of quality assurance. To this end, we provide a general description of the processes related to machine learning and embed them into a formal framework for the analysis of adaptivity, recognizing that to test an adaptive system a new approach to adaptive testing is necessary. We introduce scenario coevolution as a design pattern describing how system and test can work as antagonists in the process of software evolution. While the general pattern applies to large-scale processes (including human developers further augmenting the system), we show all techniques on a smaller-scale example of an agent navigating a simple smart factory. We point out new aspects in software engineering for adaptive systems that may be tackled naturally using scenario coevolution. This work is a substantially extended take on Gabor et al. (International symposium on leveraging applications of formal methods, Springer, pp 137–154, 2018)

@article{gaborSTTT20,

author = "Thomas Gabor and Andreas Sedlmeier and Thomy Phan and Fabian Ritz and Marie Kiermeier and Lenz Belzner and Bernhard Kempter and Cornel Klein and Horst Sauer and Reiner Schmid and Jan Wieghardt and Marc Zeller and Claudia Linnhoff-Popien",

title = "The Scenario Coevolution Paradigm: Adaptive Quality Assurance for Adaptive Systems",

year = "2020",

abstract = "Systems are becoming increasingly more adaptive, using techniques like machine learning to enhance their behavior on their own rather than only through human developers programming them. We analyze the impact the advent of these new techniques has on the discipline of rigorous software engineering, especially on the issue of quality assurance. To this end, we provide a general description of the processes related to machine learning and embed them into a formal framework for the analysis of adaptivity, recognizing that to test an adaptive system a new approach to adaptive testing is necessary. We introduce scenario coevolution as a design pattern describing how system and test can work as antagonists in the process of software evolution. While the general pattern applies to large-scale processes (including human developers further augmenting the system), we show all techniques on a smaller-scale example of an agent navigating a simple smart factory. We point out new aspects in software engineering for adaptive systems that may be tackled naturally using scenario coevolution. This work is a substantially extended take on Gabor et al. (International symposium on leveraging applications of formal methods, Springer, pp 137–154, 2018)",

url = "https://link.springer.com/article/10.1007/s10009-020-00560-5",

eprint = "https://epub.ub.uni-muenchen.de/73060/1/Gabor2020_Article_TheScenarioCoevolutionParadigm.pdf",

publisher = "Springer",

journal = "International Journal on Software Tools for Technology Transfer",

pages = "457--476",

doi = "10.1007/s10009-020-00560-5"

}

Related Articles

- T. Phan et al., “Spatially Grouped Curriculum Learning for Multi-Agent Path Finding”, AAAI 2026

- T. Phan et al., “Generative Curricula for Multi-Agent Path Finding via Unsupervised and Reinforcement Learning”, JAIR 2025

- F. Ritz et al., “Capturing Dependencies Within Machine Learning via a Formal Process Model”, ISoLA 2022

- T. Phan et al., “Resilient Multi-Agent Reinforcement Learning with Adversarial Value Decomposition”, AAAI 2021

- T. Gabor et al., “The Holy Grail of Quantum Artificial Intelligence: Major Challenges in Accelerating the Machine Learning Pipeline”, QSE 2020

- T. Phan et al., “Learning and Testing Resilience in Cooperative Multi-Agent Systems”, AAMAS 2020

- T. Gabor et al., “Scenario Co-Evolution for Reinforcement Learning on a Grid World Smart Factory Domain”, GECCO 2019

Relevant Research Areas