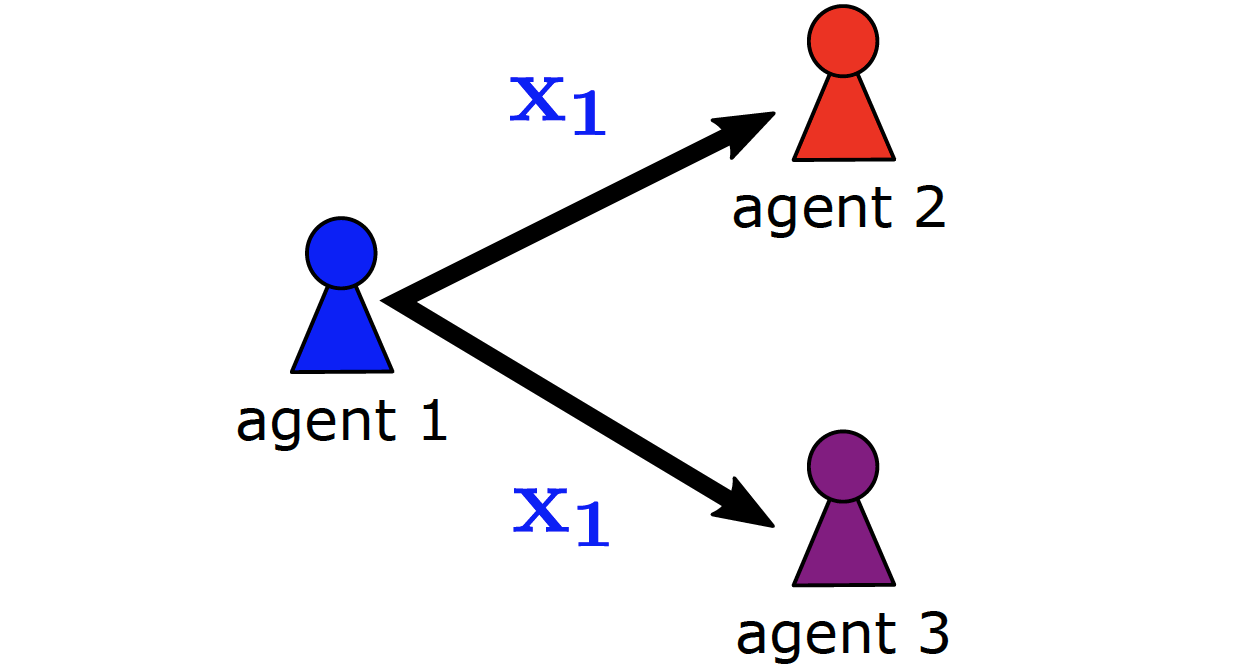

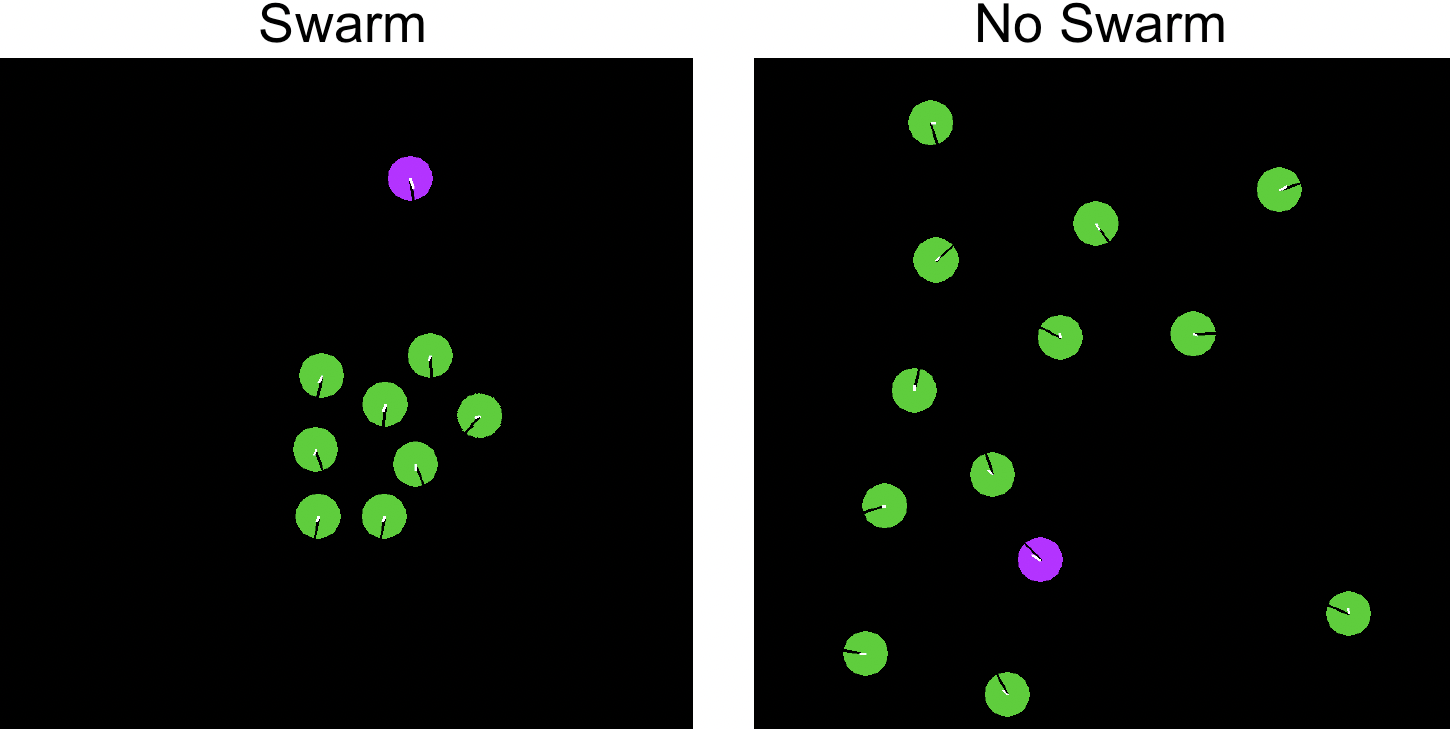

Peer incentivization (PI) is a recent approach where all agents learn to reward or penalize each other in a distributed fashion, which often leads to emergent cooperation. Current PI mechanisms implicitly assume a flawless communication channel in order to exchange rewards. These rewards are directly incorporated into the learning process without any chance to respond with feedback. Furthermore, most PI approaches rely on global information, which limits scalability and applicability to real-world scenarios where only local information is accessible. In this paper, we propose Mutual Acknowledgment Token Exchange (MATE), a PI approach defined by a two-phase communication protocol to exchange acknowledgment tokens as incentives to shape individual rewards mutually. All agents condition their token transmissions on the locally estimated quality of their own situations based on environmental rewards and received tokens. MATE is completely decentralized and only requires local communication and information. We evaluate MATE in three social dilemma domains. Our results show that MATE is able to achieve and maintain significantly higher levels of cooperation than previous PI approaches. In addition, we evaluate the robustness of MATE in more realistic scenarios, where agents can deviate from the protocol and communication failures can occur. We also evaluate the sensitivity of MATE w.r.t. the choice of token values.
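The two-phase token exchange described in the abstract can be illustrated with a simplified, single-step Python sketch. It is not the authors' implementation. Several pieces are assumptions made only for illustration: the quality measure (environment reward minus a hypothetical per-agent `value_estimate`), the fixed token value `TOKEN`, and the aggregation rules, which take the largest received request token and the smallest received response token. Neighborhoods are given as lists of agent names, so each agent only uses local information.

```python
from dataclasses import dataclass, field

TOKEN = 1.0  # hypothetical fixed token value (the paper studies sensitivity to this choice)


@dataclass
class Agent:
    name: str
    value_estimate: float  # hypothetical local baseline, e.g. a learned value estimate
    neighbors: list = field(default_factory=list)  # names of agents within communication range

    def quality(self, reward: float) -> float:
        # Assumed stand-in for the "locally estimated quality" of the agent's own situation
        return reward - self.value_estimate


def mate_step(agents, env_rewards, token=TOKEN):
    """One round of a simplified two-phase token exchange; returns shaped rewards."""
    requests = {a.name: [] for a in agents}   # (sender, token) pairs received in phase 1
    responses = {a.name: [] for a in agents}  # +/- tokens received in phase 2
    by_name = {a.name: a for a in agents}

    # Phase 1 (request): agents whose own situation looks good send a token to neighbors
    for a in agents:
        if a.quality(env_rewards[a.name]) >= 0:
            for n in a.neighbors:
                requests[n].append((a.name, token))

    # Phase 2 (response): recipients re-check their situation including the received token
    # and answer each sender with a positive or negative token
    for name, received in requests.items():
        if not received:
            continue
        req_bonus = max(t for _, t in received)  # assumption: max aggregation
        ok = by_name[name].quality(env_rewards[name] + req_bonus) >= 0
        for sender, _ in received:
            responses[sender].append(token if ok else -token)

    # Reward shaping: environment reward plus aggregated request and response tokens
    shaped = {}
    for a in agents:
        req_bonus = max((t for _, t in requests[a.name]), default=0.0)
        res_bonus = min(responses[a.name], default=0.0)  # assumption: min aggregation
        shaped[a.name] = env_rewards[a.name] + req_bonus + res_bonus
    return shaped


if __name__ == "__main__":
    a = Agent("A", value_estimate=0.0, neighbors=["B"])
    b = Agent("B", value_estimate=5.0, neighbors=["A"])
    print(mate_step([a, b], {"A": 1.0, "B": 2.0}))
```

In this sketch, an agent that sends a request is penalized with a negative response when its neighbor's situation does not look good even after counting the received token, so acknowledgments only pay off when they are mutual.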

@article{phanJAAMAS2024,

author = "Thomy Phan and Felix Sommer and Fabian Ritz and Philipp Altmann and Jonas Nüßlein and Michael Kölle and Lenz Belzner and Claudia Linnhoff-Popien",

title = "Emergent Cooperation from Mutual Acknowledgment Exchange in Multi-Agent Reinforcement Learning",

year = "2024",

abstract = "Peer incentivization (PI) is a recent approach where all agents learn to reward or penalize each other in a distributed fashion, which often leads to emergent cooperation. Current PI mechanisms implicitly assume a flawless communication channel in order to exchange rewards. These rewards are directly incorporated into the learning process without any chance to respond with feedback. Furthermore, most PI approaches rely on global information, which limits scalability and applicability to real-world scenarios where only local information is accessible. In this paper, we propose Mutual Acknowledgment Token Exchange (MATE), a PI approach defined by a two-phase communication protocol to exchange acknowledgment tokens as incentives to shape individual rewards mutually. All agents condition their token transmissions on the locally estimated quality of their own situations based on environmental rewards and received tokens. MATE is completely decentralized and only requires local communication and information. We evaluate MATE in three social dilemma domains. Our results show that MATE is able to achieve and maintain significantly higher levels of cooperation than previous PI approaches. In addition, we evaluate the robustness of MATE in more realistic scenarios, where agents can deviate from the protocol and communication failures can occur. We also evaluate the sensitivity of MATE w.r.t. the choice of token values.",

url = "https://link.springer.com/article/10.1007/s10458-024-09666-5",

eprint = "https://link.springer.com/content/pdf/10.1007/s10458-024-09666-5.pdf",

publisher = "Springer Nature",

journal = "Autonomous Agents and Multi-Agent Systems",

keywords = "multi-agent learning, reinforcement learning, mutual acknowledgments, peer incentivization, emergent cooperation",

doi = "10.1007/s10458-024-09666-5"

}

Related Articles

- M. Koelle et al., “Multi-Agent Quantum Reinforcement Learning using Evolutionary Optimization”, ICAART 2024

- T. Phan et al., “Emergent Cooperation from Mutual Acknowledgment Exchange”, AAMAS 2022 (conference version)

- L. Belzner et al., “The Sharer’s Dilemma in Collective Adaptive Systems of Self-Interested Agents”, ISoLA 2018

- K. Schmid et al., “Action Markets in Deep Multi-Agent Reinforcement Learning”, ICANN 2018

- T. Phan, “Emergence and Resilience in Multi-Agent Reinforcement Learning”, PhD Thesis

Relevant Research Areas