Learning-Based Search

Machine Learning-Guided Search for Multi-Agent Path Finding

Heuristic search underpins a variety of real-world applications, such as routing, robot motion planning, and multi-agent coordination. Hand-crafted heuristics typically exploit structural properties of the underlying problem to speed up the search.
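As a minimal illustration of a hand-crafted heuristic, the sketch below runs A* on a 4-connected grid and uses the Manhattan distance, which exploits the grid structure: with unit move costs it never overestimates the remaining distance, so the first time the goal is popped from the queue its cost is optimal. The grid encoding ('#' for obstacles) and the function names are assumptions chosen for illustration. Many MAPF solvers use a space-time variant of such a single-agent search as a building block.

```python
import heapq
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def manhattan(a: Cell, b: Cell) -> int:
    # Admissible and consistent on a 4-connected grid with unit move costs.
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[int]:
    """Return the length of a shortest path from start to goal, or None.

    Assumed encoding: grid is a list of strings, '#' is an obstacle, '.' is free.
    """
    rows, cols = len(grid), len(grid[0])
    g = {start: 0}  # best known cost-to-come per cell
    open_list = [(manhattan(start, goal), 0, start)]  # entries: (f = g + h, g, cell)
    while open_list:
        _, cost, cell = heapq.heappop(open_list)
        if cell == goal:
            return cost
        if cost > g.get(cell, float("inf")):
            continue  # stale queue entry; a cheaper path was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < g.get((nr, nc), float("inf")):
                    g[(nr, nc)] = new_cost
                    f = new_cost + manhattan((nr, nc), goal)
                    heapq.heappush(open_list, (f, new_cost, (nr, nc)))
    return None  # goal unreachable


if __name__ == "__main__":
    # A wall in the middle column forces a detour through the bottom row.
    print(astar([".#.", ".#.", "..."], (0, 0), (0, 2)))  # → 6
```

The heuristic only changes how many cells are expanded, not the returned cost; a weaker heuristic (e.g. always 0, which turns A* into Dijkstra's algorithm) would find the same answer with more expansions.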

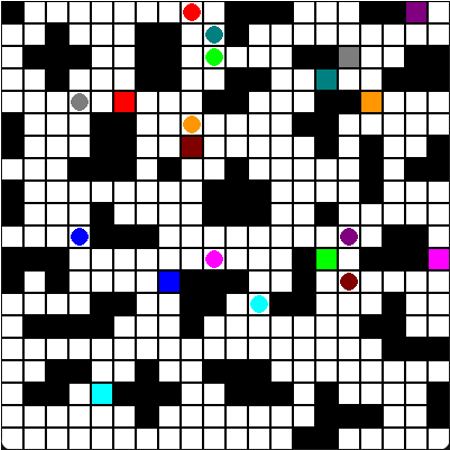

We currently focus on multi-agent path finding (MAPF), a particularly challenging search domain, and use machine learning to guide heuristic search algorithms through online adaptation [1,2,3]. We also develop simple curriculum learning approaches to learn fast policies for highly constrained environments [4,5,6].
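One generic form of online adaptation can be sketched as a UCB1 multi-armed bandit that chooses, at every iteration of an anytime search, which of several candidate heuristics to apply next, and updates its estimates from the observed reward (for example, the normalized reduction in solution cost). This is an assumption-based illustration, not necessarily the method of [1,2,3]; the class name, the heuristic labels, and the fixed reward values are hypothetical.

```python
import math
from typing import Dict, List


class UCB1Selector:
    """Choose among candidate search heuristics online with the UCB1 rule (illustrative)."""

    def __init__(self, arms: List[str], c: float = math.sqrt(2)) -> None:
        self.arms = arms
        self.c = c                      # exploration weight
        self.counts = [0] * len(arms)   # times each heuristic was applied
        self.means = [0.0] * len(arms)  # running mean reward per heuristic

    def select(self) -> int:
        # Apply every heuristic once before relying on confidence bounds.
        for i, n in enumerate(self.counts):
            if n == 0:
                return i
        total = sum(self.counts)
        return max(
            range(len(self.arms)),
            key=lambda i: self.means[i] + self.c * math.sqrt(math.log(total) / self.counts[i]),
        )

    def update(self, i: int, reward: float) -> None:
        # Incremental mean update with the observed reward (e.g. cost improvement).
        self.counts[i] += 1
        self.means[i] += (reward - self.means[i]) / self.counts[i]


if __name__ == "__main__":
    # Hypothetical heuristic labels with fixed stand-in rewards; in a real anytime
    # solver the reward would come from applying the heuristic to the current solution.
    rewards: Dict[str, float] = {"random": 0.1, "agent_based": 0.9, "map_based": 0.3}
    selector = UCB1Selector(list(rewards))
    for _ in range(200):
        i = selector.select()
        selector.update(i, rewards[selector.arms[i]])
    print(dict(zip(selector.arms, selector.counts)))
```

Over time the selector concentrates on the heuristic with the highest observed reward while still occasionally revisiting the others, which lets it react if their usefulness changes during the search.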

Publications:

[1] Adaptive Anytime Multi-Agent Path Finding

[2] Adaptive Delay-Based Multi-Agent Path Finding

[3] Counterfactual Adaption in Anytime Multi-Agent Path Finding

[4] Curriculum Learning for Multi-Agent Path Finding

[5] Generative Curricula for Multi-Agent Path Finding

[6] Spatial Curricula for Multi-Agent Path Finding

Multi-Agent Planning and Learning

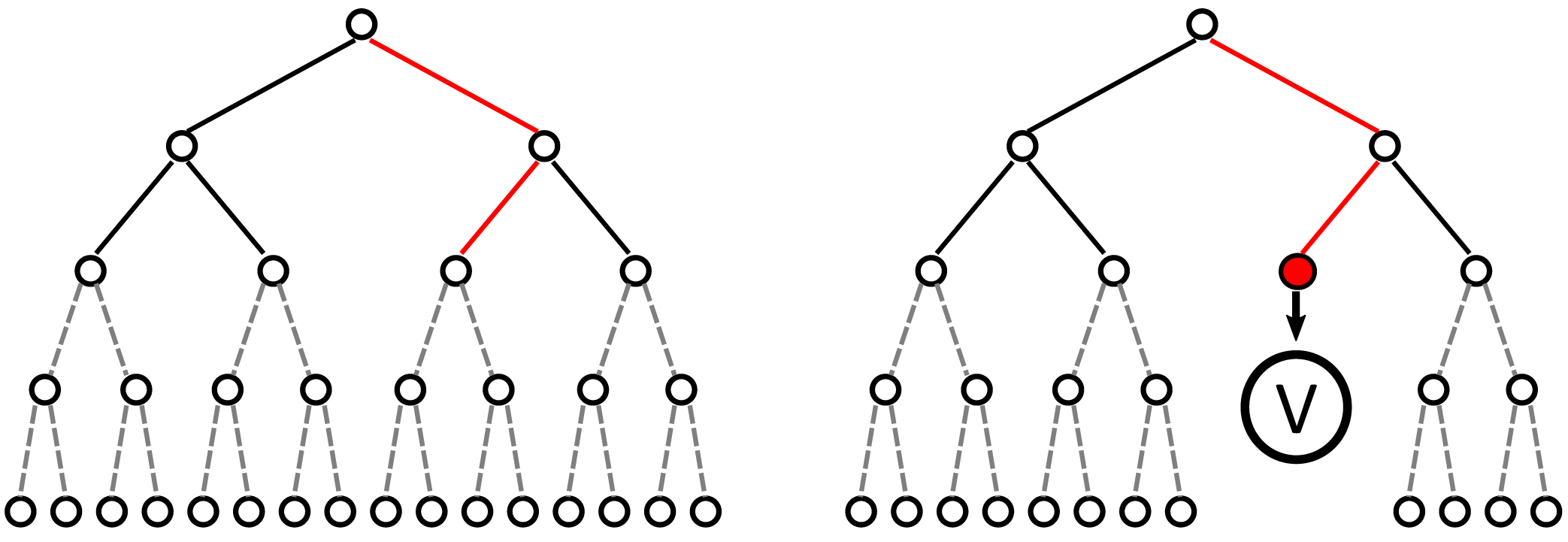

Cooperative multi-agent reinforcement learning (MARL) commonly exploits global information, such as states and joint actions, during training to produce coordinated strategies for decentralized decision making. However, emergent phenomena can arise dynamically in various forms and at various levels, and they are difficult to infer from states and joint actions alone, which limits performance and scalability in large-scale domains.

We propose to explicitly account for emergent behavior through distributed Monte Carlo planning to overcome these limitations. To support the distributed planning process, we provide learned value functions [1] and policy functions [2] that narrow down the search for improved efficiency.
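One common way a learned policy and value function can narrow a Monte Carlo search is the PUCT selection rule popularized by AlphaZero: the policy supplies a prior P(s, a) that scales each action's exploration bonus, and the value function replaces random rollouts when evaluating leaves. The sketch below assumes a single decision point, with hypothetical `policy` and `value_fn` inputs standing in for the learned networks; it does not model the distributed multi-agent planning described above.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Node:
    prior: float            # P(s, a) supplied by the (hypothetical) learned policy
    visits: int = 0         # N(s, a)
    value_sum: float = 0.0  # sum of backed-up value estimates
    children: Dict[int, "Node"] = field(default_factory=dict)

    def q(self) -> float:
        # Mean backed-up value; unvisited nodes default to 0.
        return self.value_sum / self.visits if self.visits else 0.0


def puct_score(parent: Node, child: Node, c_puct: float) -> float:
    # Q term exploits good actions; the bonus is scaled by the prior, so actions
    # the policy considers unlikely receive little exploration.
    return child.q() + c_puct * child.prior * math.sqrt(parent.visits) / (1 + child.visits)


def search(policy: List[float], value_fn: Callable[[int], float],
           simulations: int, c_puct: float = 1.5) -> Dict[int, int]:
    """Run PUCT simulations at one decision point and return visit counts."""
    root = Node(prior=1.0)
    root.children = {a: Node(prior=p) for a, p in enumerate(policy)}
    for _ in range(simulations):
        # Select the child with the highest PUCT score.
        a = max(root.children, key=lambda k: puct_score(root, root.children[k], c_puct))
        child = root.children[a]
        # Evaluate with the value estimate instead of a random rollout, then back up.
        v = value_fn(a)
        for node in (root, child):
            node.visits += 1
            node.value_sum += v
    return {a: ch.visits for a, ch in root.children.items()}


if __name__ == "__main__":
    # Hypothetical peaked prior and flat value estimate.
    print(search([0.8, 0.15, 0.05], lambda a: 0.5, simulations=100))
```

With a peaked prior and equal values, most simulations go to the action the policy favors; with a uniform prior, the value estimates alone steer the visits toward the best-valued action.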

Publications:

[1] Value Function Approximation for Distributed Planning

[2] Distributed Planning for Multi-Agent Policy Approximation