Resilience in Autonomous Systems

Scenario Coevolution

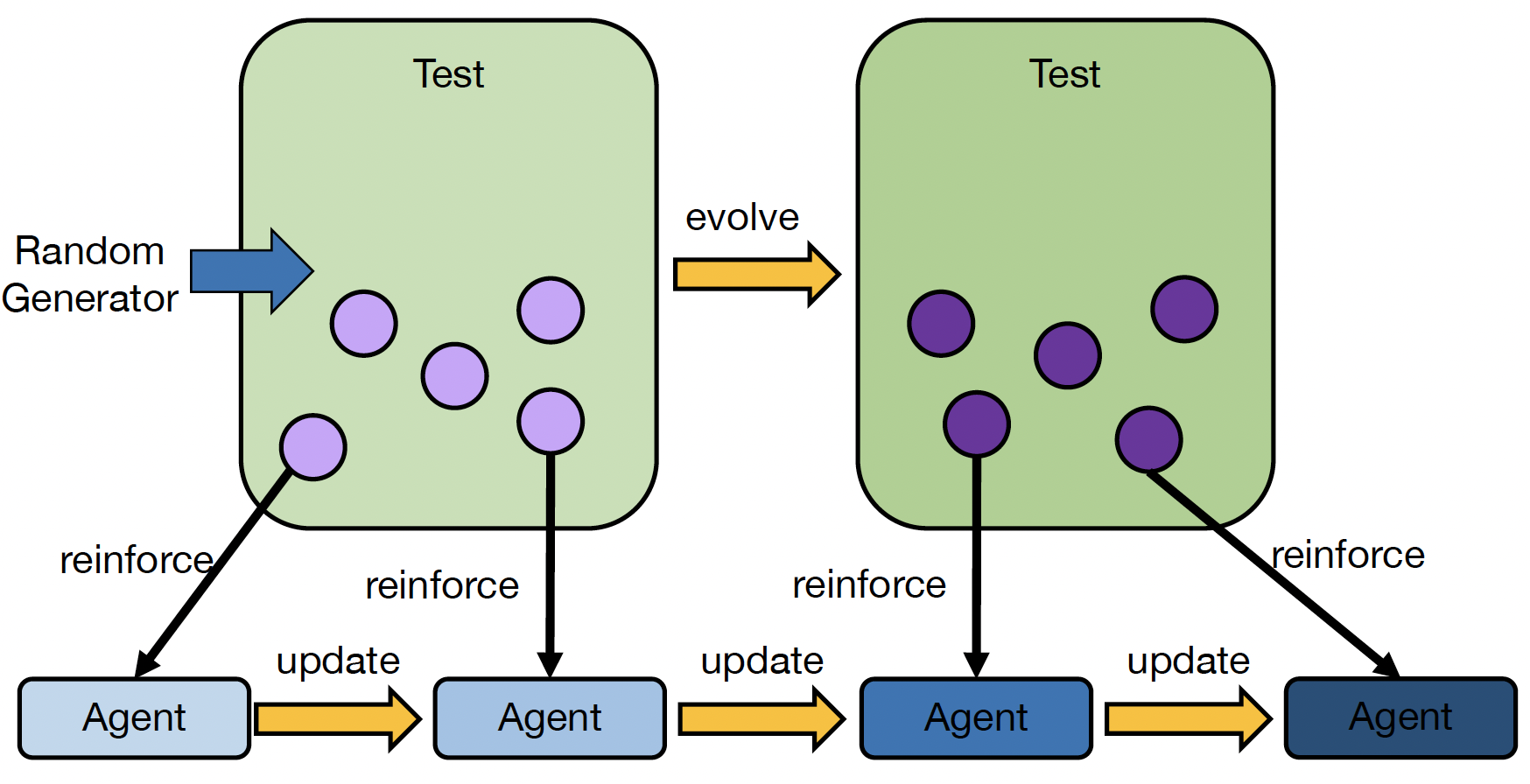

Reinforcement learning (RL) agents are commonly trained under idealized conditions that assume a stationary environment. In complex domains, such agents are typically vulnerable to rare events that are seldom or never encountered during training, and they may perform poorly or even fail catastrophically when such events occur. To learn resilient behavior, an RL agent needs to be exposed to these rare events more often during training.

We devise coevolutionary RL algorithms in which the environment is modeled as an adversary and optimized with evolutionary algorithms to minimize the original RL objective, i.e., the agent's expected return. The adversarial environment can be used to train resilient agents [1], and it can evolve in an open-ended manner to provide adaptive test cases for continuously evolving systems [2].
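The coevolutionary loop described above could look roughly like the following sketch. It is a toy illustration under several assumptions, not the algorithm from [1] or [2]: a scenario is a single disturbance value `s` in [-1, 1], the agent is a single parameter `a` with return `1 - (a - s)^2`, scenarios evolve with a (μ+λ)-style scheme (one mutated child per parent, then truncation), and a random-search hill climber stands in for the actual RL update. The hall-of-fame `archive` is a common coevolution device added here to damp cycling between agent and adversary.

```python
import random
from typing import List, Tuple

# Hypothetical toy problem (assumption): the agent is a single parameter `a`,
# a scenario is a single disturbance `s` in [-1, 1], and the agent's return
# is highest when its parameter matches the disturbance it faces.
def agent_return(a: float, s: float) -> float:
    return 1.0 - (a - s) ** 2


def clip(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


def evolve_scenarios(population: List[float], a: float, rng: random.Random,
                     sigma: float = 0.2) -> List[float]:
    """One generation of adversarial evolution: mutate every scenario once,
    then keep the len(population) scenarios that MINIMIZE the agent's return."""
    children = [clip(s + rng.gauss(0.0, sigma)) for s in population]
    pool = population + children
    pool.sort(key=lambda s: agent_return(a, s))  # hardest scenarios first
    return pool[: len(population)]


def improve_agent(a: float, scenarios: List[float], rng: random.Random,
                  sigma: float = 0.1, trials: int = 20) -> float:
    """Stand-in for an RL update (assumption): random-search hill climbing
    on the agent's mean return over the given scenarios."""
    def mean_return(x: float) -> float:
        return sum(agent_return(x, s) for s in scenarios) / len(scenarios)

    best, best_score = a, mean_return(a)
    for _ in range(trials):
        candidate = clip(best + rng.gauss(0.0, sigma))
        score = mean_return(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best


def coevolve(generations: int = 50, pop_size: int = 10,
             seed: int = 0) -> Tuple[float, List[float], List[float]]:
    rng = random.Random(seed)
    a = rng.uniform(-1.0, 1.0)
    scenarios = [rng.uniform(-1.0, 1.0) for _ in range(pop_size)]
    archive: List[float] = []  # hall of fame of past hardest scenarios
    for _ in range(generations):
        # Environment step: scenarios evolve against the current agent.
        scenarios = evolve_scenarios(scenarios, a, rng)
        archive.append(scenarios[0])
        # Agent step: train on current and archived scenarios, which is
        # intended to damp cycling between agent and adversary.
        a = improve_agent(a, scenarios + archive, rng)
    return a, scenarios, archive
```

Usage: `a, scenarios, archive = coevolve(generations=50, seed=0)`. Because truncation keeps the hardest scenarios from parents and children combined, the population's mean agent return can only stay the same or drop in each generation for a fixed agent, which is what makes the evolved environment adversarial.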

Publications:

[1] Coevolutionary Reinforcement Learning

[2] Adaptive Quality Assurance

Resilience in Multi-Agent Systems

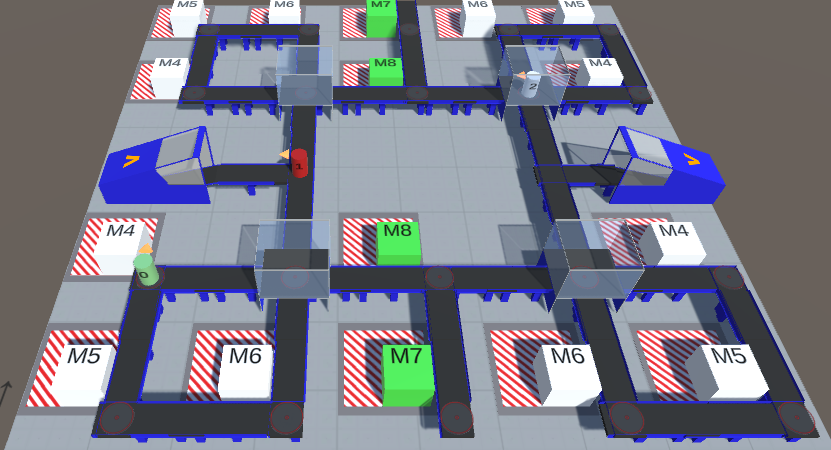

Cooperative multi-agent RL (MARL) agents are commonly trained under idealized conditions that assume all agents will always behave cooperatively. However, partially adversarial behavior may nevertheless occur due to hardware or software flaws, potentially leading to catastrophic failure. Thus, even cooperative multi-agent systems (MAS) need to be prepared for adversarial change, since each agent is a potential source of failure.

We devise adversarial algorithms to improve resilience in MAS. During training, we randomly replace productive agents with antagonists to expose the system to partial adversarial change [1]. We also devise evaluation methods to fairly compare the resilience of different MARL algorithms [2].
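The random replacement of productive agents by antagonists could be sketched roughly as below. This is an illustrative assumption, not the training procedure of [1]: the per-agent replacement probability `replace_prob`, the cap `max_antagonists`, the always-defecting antagonist, and the toy team reward are all hypothetical. The returned mask marks adversarial slots so that, in this sketch, only the remaining productive agents would receive learning updates.

```python
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical policy type (assumption): maps an observation to an action.
Policy = Callable[[int], int]


def sample_team(protagonists: Sequence[Policy], antagonist: Policy,
                replace_prob: float, max_antagonists: int,
                rng: random.Random) -> Tuple[List[Policy], List[bool]]:
    """Replace each protagonist by the antagonist with probability
    `replace_prob`, keeping at most `max_antagonists` replacements so the
    change stays partial. Returns the team and a mask of adversarial slots."""
    flagged = [i for i in range(len(protagonists)) if rng.random() < replace_prob]
    if len(flagged) > max_antagonists:
        flagged = rng.sample(flagged, max_antagonists)
    mask = [i in flagged for i in range(len(protagonists))]
    team = [antagonist if adversarial else policy
            for policy, adversarial in zip(protagonists, mask)]
    return team, mask


def team_reward(actions: List[int]) -> float:
    # Toy cooperative reward (assumption): fraction of agents choosing action 1.
    return sum(actions) / len(actions)


def run_training(num_agents: int = 4, episodes: int = 100,
                 replace_prob: float = 0.2, max_antagonists: int = 1,
                 seed: int = 0) -> Tuple[List[float], List[int]]:
    rng = random.Random(seed)
    protagonists: List[Policy] = [lambda obs: 1 for _ in range(num_agents)]
    antagonist: Policy = lambda obs: 0  # always defects
    rewards: List[float] = []
    updates = [0] * num_agents  # how often each protagonist would be trained
    for _ in range(episodes):
        team, mask = sample_team(protagonists, antagonist, replace_prob,
                                 max_antagonists, rng)
        actions = [policy(0) for policy in team]
        rewards.append(team_reward(actions))
        for i, adversarial in enumerate(mask):
            if not adversarial:
                updates[i] += 1  # placeholder for a learning update of agent i
    return rewards, updates
```

With `max_antagonists=1` and four agents, every episode still contains at least three cooperating agents, so the toy team reward never drops below 0.75; the cap is what keeps the adversarial change partial rather than total.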

Publications:

[1] Antagonist-Based Learning

[2] Adversarial Value Decomposition