Emergence in Multi-Agent Systems

Emerging Swarms

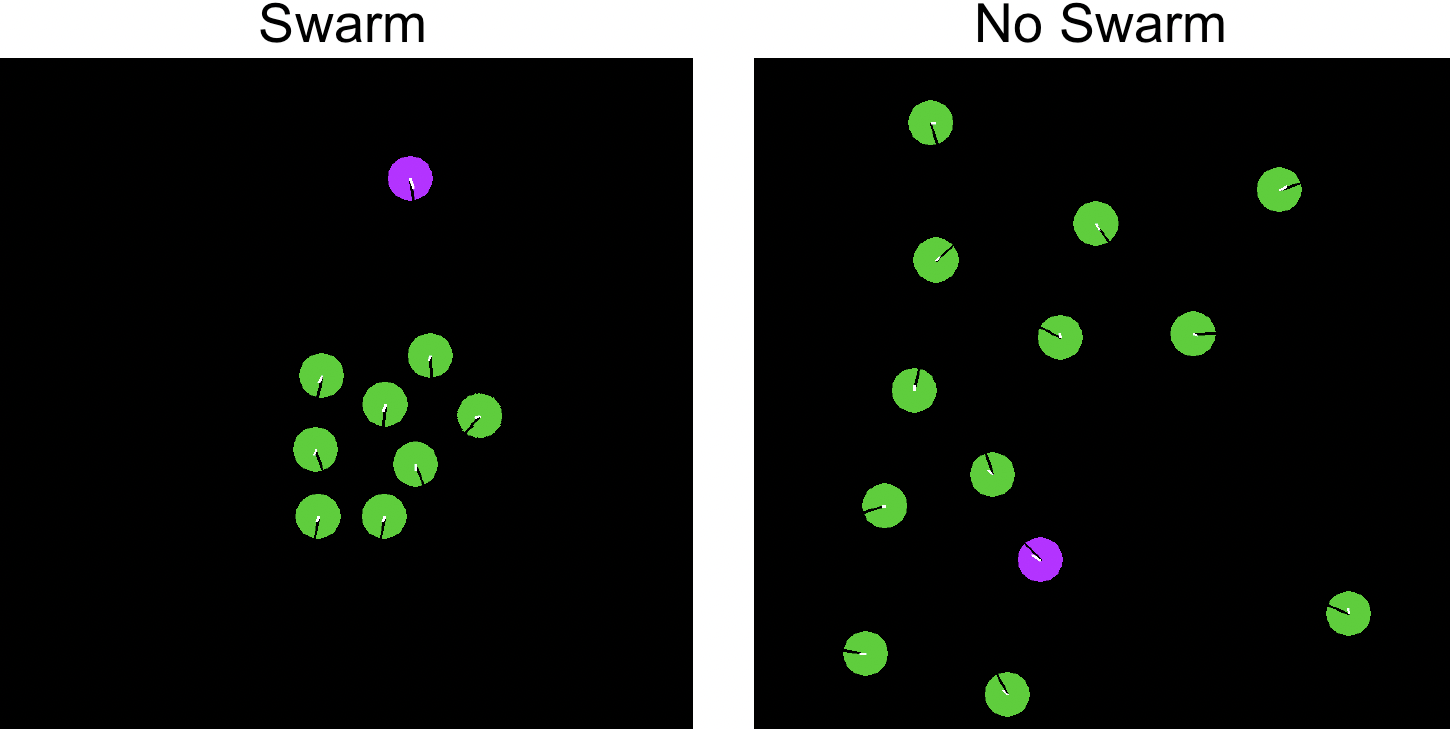

Animals such as birds and fish tend to form swarms to forage collectively or to protect each other from predators. Swarms emerge from local interactions among thousands of individuals without a centralized controller. Due to the vast scale of such systems, the emergence and characteristics of swarms are hard to interpret and anticipate.

Using reinforcement learning (RL) techniques, we can study and quantify the effect of emerging swarms under controlled conditions. To this end, we employ a large-scale predator-prey simulation, where all prey must learn to survive independently [1]. We also adapt the simulation to foraging scenarios, where multiple agents independently search for and follow a target under partial observability [2]. In all cases, we observe the emergence of swarms without explicit incentivization through rewards.

Publications:

[1] Emergent Flocking

[2] Foraging Swarms

Emergent Sustainability

Common resources can lead to greedy behavior in self-interested multi-agent systems (MAS), where agents learn to deplete all resources without any chance for regeneration. Such behavior, known as the tragedy of the commons, is not desirable for real-world applications like autonomous driving or network communication, where limited resources should be used in a sustainable way.
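The depletion dynamics described above can be illustrated with a minimal sketch. It is not the simulation used in the cited work: the function `simulate_commons`, the logistic regrowth rule, and all parameter values are assumptions chosen only to show how a shared stock collapses once total harvest exceeds what the resource can regrow.

```python
def simulate_commons(num_agents, harvest_per_agent, steps=50,
                     stock=100.0, capacity=100.0, growth_rate=0.25):
    """Return the shared resource stock after each step (hypothetical toy model)."""
    history = []
    for _ in range(steps):
        # All agents harvest from the same pool; they cannot take more than is left.
        demand = num_agents * harvest_per_agent
        stock -= min(stock, demand)
        # Assumed logistic regrowth: proportional to the remaining stock,
        # so a fully depleted resource never regenerates.
        stock += growth_rate * stock * (1.0 - stock / capacity)
        history.append(stock)
    return history


# Greedy agents demand far more than the resource can regrow per step.
greedy = simulate_commons(num_agents=10, harvest_per_agent=5.0)
# Restrained agents stay below the regrowth limit of this toy model.
restrained = simulate_commons(num_agents=10, harvest_per_agent=0.5)

print("greedy final stock:", greedy[-1])
print("restrained final stock:", round(restrained[-1], 2))
```

In this toy model the greedy population drives the stock to zero within a few steps and it stays there, while the restrained population settles at a positive stock level. Learning agents would have to discover such restraint on their own, which is the difficulty the paragraph above refers to.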

We study different environment factors of existing domains, e.g., the predator-prey domain mentioned above, to enable the emergence of sustainable behavior in self-interested MAS [1]. We also evaluate how the number of self-interested agents affects emergent sustainability [2].

Furthermore, we study the emergence of stability in neural artificial chemistry systems, where several neural networks evolve via local interaction, self-replication, and surprise minimization [3].

Publications:

[1] Ecosystem Management

[2] Multi-Agent Ecosystem Management

[3] Neural Artificial Chemistry Systems

Emergent Cooperation

As self-learning agents become increasingly prevalent in the real world, they will inevitably learn to interact with each other. In self-interested MAS, conflict and competition may arise due to opposing goals or shared resources. Agents trained with naive RL approaches commonly fail to cooperate in such scenarios, leading to undesirable emergent behavior.

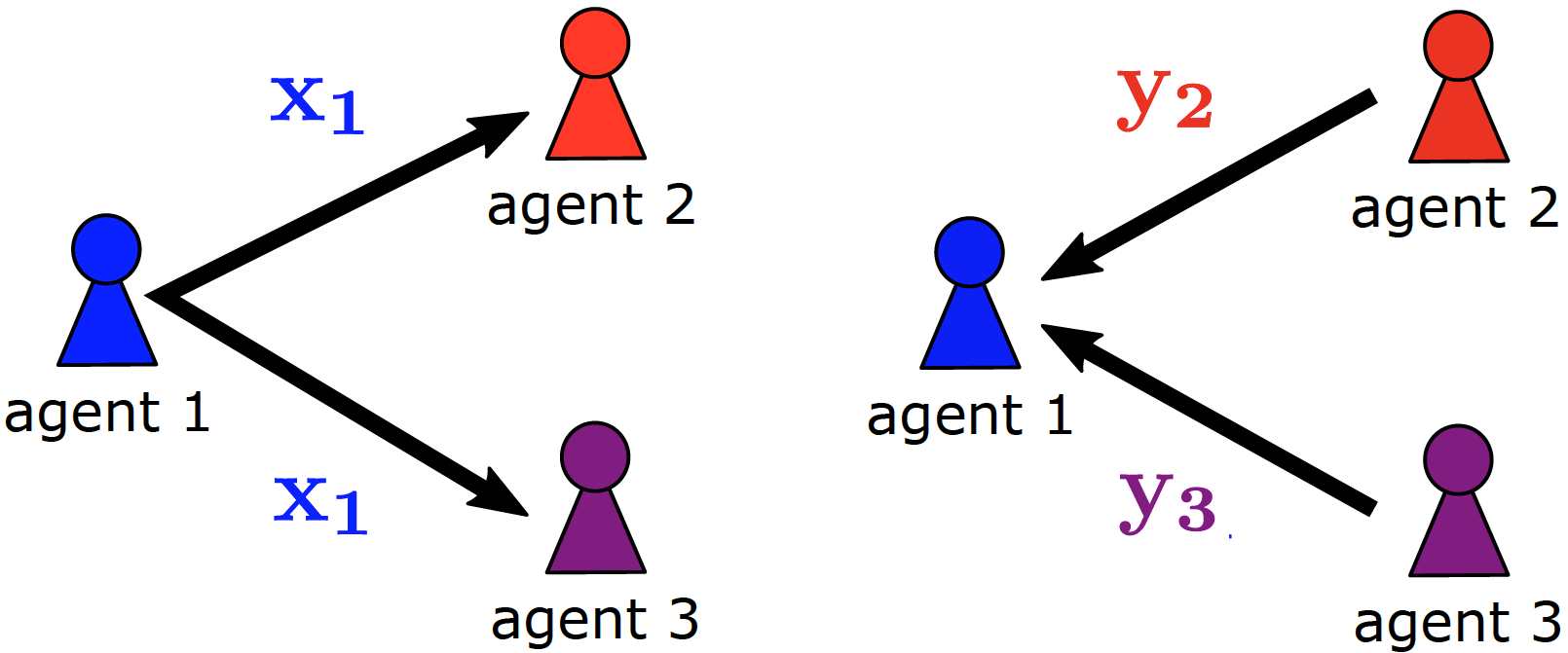

To incentivize cooperative behavior in self-interested MAS, we study decentralized mechanisms, where agents learn to reward or penalize each other. These mechanisms are based on market theory [1] or social behavior like acknowledgments [2,3]. We also consider real-world aspects like defectors, locality of information, and noisy communication [2,3].
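As a rough illustration of agents rewarding or penalizing each other, the sketch below adds peer incentives to each agent's environment reward. It does not reproduce the mechanisms of [1], [2], or [3]: the function `shaped_rewards`, the additive combination, and the `neighbors` structure (a stand-in for locality of information) are assumptions made for this example.

```python
def shaped_rewards(env_rewards, incentives, neighbors):
    """Combine environment rewards with peer incentives (hypothetical sketch).

    env_rewards: one float per agent, as given by the environment.
    incentives:  incentives[i][j] is the value agent i sends to agent j
                 (positive = reward, negative = penalty).
    neighbors:   neighbors[i] is the set of agents that agent i can reach.
    """
    total = list(env_rewards)
    for sender in range(len(env_rewards)):
        # Incentives only arrive at agents within the sender's neighborhood.
        for receiver in neighbors[sender]:
            total[receiver] += incentives[sender][receiver]
    return total


env_rewards = [1.0, 1.0, 0.0]
incentives = [
    [0.0, 0.0, 0.5],    # agent 0 rewards agent 2
    [-0.25, 0.0, 0.0],  # agent 1 penalizes agent 0
    [0.0, 0.3, 0.0],    # agent 2 tries to reward agent 1 but cannot reach it
]
neighbors = {0: {1, 2}, 1: {0}, 2: set()}

print(shaped_rewards(env_rewards, incentives, neighbors))
```

Each agent would then learn from its shaped reward instead of the raw environment reward. How incentives are chosen, exchanged, and protected against defectors or noisy communication is what the decentralized mechanisms themselves specify.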

Publications:

[1] Action Markets

[2] Mutual Acknowledgment Exchange

[3] Reciprocal Peer Incentivization