Dependability in Machine Learning Systems

Anomaly Detection

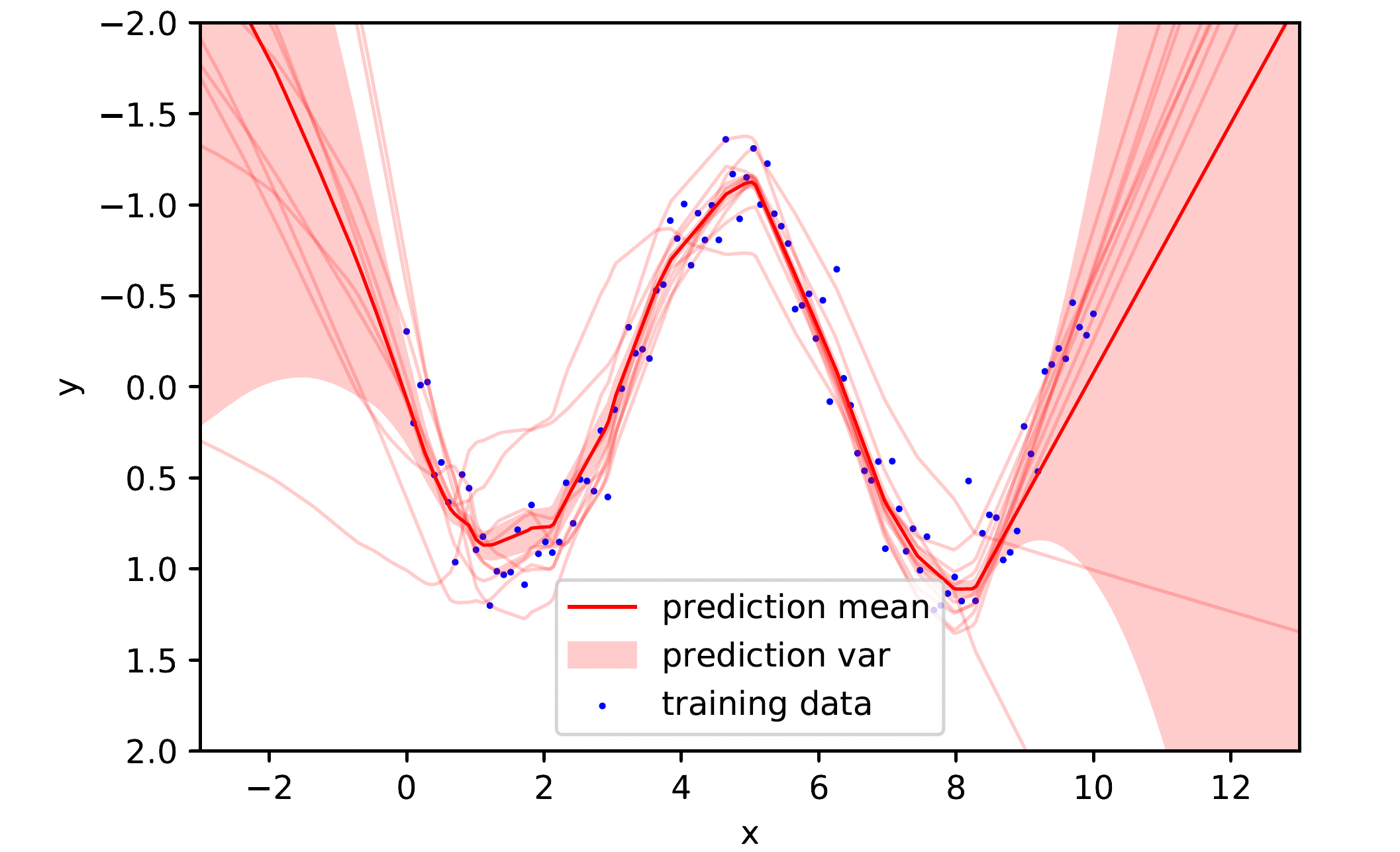

Generalization is an important concern in reinforcement learning (RL), since agents are typically trained and evaluated in simulations of limited scope. As the real world is complex and messy, RL agents are likely to encounter novel situations that differ from the samples seen during training. Such anomalies, or out-of-distribution samples, can cause undesired behavior and thus need careful consideration in safety-critical domains.

We investigate methods to measure an agent’s uncertainty about a particular situation, which can be used for monitoring or as a warning system [1,2]. We also consider anomaly detection in a wider scope, proposing desiderata for future research such as modeling normalcy, the absence of rewards during deployment, and suitable response strategies [3]. Finally, we work on methods to improve generalization and to cope with domain shifts [4,5].
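As an illustration of uncertainty-based monitoring, the following sketch uses one common approach, disagreement within an ensemble of value estimators, which is not necessarily the method of [1,2]. All names (`ensemble_uncertainty`, `calibrate_threshold`, `is_anomalous`) and the toy linear estimators are hypothetical, and the alarm threshold is assumed to be a high quantile of the uncertainty scores observed on training data.

```python
import statistics
from typing import Callable, List, Sequence

# Hypothetical interface: each ensemble member maps an observation to a scalar value estimate.
Estimator = Callable[[Sequence[float]], float]


def ensemble_uncertainty(members: Sequence[Estimator], obs: Sequence[float]) -> float:
    """Uncertainty as disagreement: population std. dev. of the members' predictions."""
    predictions = [member(obs) for member in members]
    return statistics.pstdev(predictions)


def calibrate_threshold(
    members: Sequence[Estimator],
    training_obs: List[Sequence[float]],
    quantile: float = 0.95,
) -> float:
    """Assumed calibration: a high quantile of uncertainty on in-distribution observations."""
    scores = sorted(ensemble_uncertainty(members, obs) for obs in training_obs)
    index = min(int(quantile * len(scores)), len(scores) - 1)
    return scores[index]


def is_anomalous(members: Sequence[Estimator], obs: Sequence[float], threshold: float) -> bool:
    """Raise a warning when the ensemble disagrees more than on typical training data."""
    return ensemble_uncertainty(members, obs) > threshold


# Toy ensemble: linear estimators that agree near the origin and diverge far from it.
members = [lambda obs, w=w: w * obs[0] for w in (0.9, 1.0, 1.1)]
training_obs = [[x / 10] for x in range(-10, 11)]  # training covers [-1, 1]
threshold = calibrate_threshold(members, training_obs)

print(is_anomalous(members, [0.5], threshold))   # False: inside the training range
print(is_anomalous(members, [10.0], threshold))  # True: far outside the training range
```

The ensemble members agree on familiar inputs and drift apart on unfamiliar ones, so their spread serves as a simple warning signal; how well this works in practice depends on the diversity of the ensemble and on the chosen threshold.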

Publications:

[1] Uncertainty-Based Detection

[2] Out-of-Distribution Classification Methods

[3] Anomaly Detection in Reinforcement Learning

[4] Discriminative Reward Co-Training

[5] Compact Reshaped Observation Processing

Specification Awareness

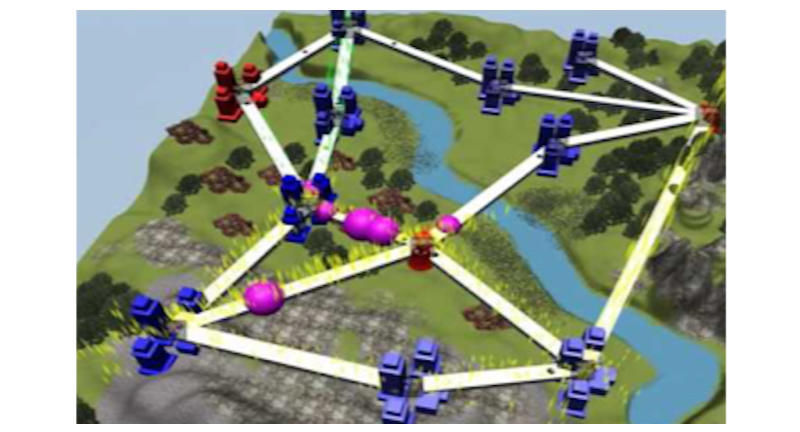

RL agents are often trained and evaluated with respect to a single objective. In the real world, however, a system typically has to comply with a specification consisting of several functional and non-functional requirements. These requirements may be aligned or in conflict with the original objective. Therefore, a tradeoff solution is needed, which is hard to find with classic RL methods, as these tend to satisfy easy requirements while neglecting difficult ones.

We investigate such solutions by proposing specification-aware (multi-agent) RL, which aims to comply with several requirements simultaneously in a smart factory scenario [1,2].
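To illustrate why a single aggregated objective can favor easy requirements, the following sketch compares two hypothetical candidate policies under two aggregation rules: a weighted sum of per-requirement compliance scores and the worst-case (minimum) score. The requirement names, scores, and weights are invented for illustration, and the worst-case rule is just one simple tradeoff criterion, not the specification-aware method of [1,2].

```python
from typing import Callable, Dict

# Hypothetical compliance scores in [0, 1], one per requirement of a specification.
Scores = Dict[str, float]


def weighted_sum(scores: Scores, weights: Dict[str, float]) -> float:
    """Classic scalarization: poor compliance on one requirement can be offset by others."""
    return sum(weights[name] * value for name, value in scores.items())


def worst_case(scores: Scores) -> float:
    """Worst-case aggregation: a policy is only as good as its least satisfied requirement."""
    return min(scores.values())


def best_policy(candidates: Dict[str, Scores], aggregate: Callable[[Scores], float]) -> str:
    """Return the name of the candidate policy with the highest aggregated score."""
    return max(candidates, key=lambda name: aggregate(candidates[name]))


# Invented smart-factory requirements and scores, for illustration only.
candidates = {
    "easy_only": {"throughput": 1.0, "energy": 1.0, "safety_distance": 0.0},
    "balanced": {"throughput": 0.6, "energy": 0.6, "safety_distance": 0.6},
}
weights = {"throughput": 1.0, "energy": 1.0, "safety_distance": 1.0}

print(best_policy(candidates, lambda s: weighted_sum(s, weights)))  # easy_only (2.0 vs. about 1.8)
print(best_policy(candidates, worst_case))  # balanced (0.6 vs. 0.0)
```

Under the weighted sum, completely ignoring the hardest requirement is compensated by the easy ones; the worst-case rule prefers the balanced policy instead, at the cost of being sensitive to a single poorly satisfied requirement.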

Publications:

[1] Specification Aware Learning

[2] Specification Aware Multi-Agent Training

Engineering and Operation

As in software and systems engineering, building and operating dependable machine learning (ML) systems requires suitable tools beyond mere data, e.g., for design, validation, monitoring, and root cause analysis. Such tools would help improve safety, trust, and acceptance when deploying such systems in the real world.

We develop process models and paradigms that enable a systematic approach to engineering and operating dependable ML systems. We address adaptive testing of continuously evolving systems through coevolution [1], a generic engineering process with ML-specific artefacts [2], and self-adaptive operations that (partially) automate engineering and operation [3].

Publications:

[1] Scenario Coevolution Paradigm

[2] Process Model for ML Engineering

[3] Self-Adaptive Engineering of ML Systems